Answered step by step

Verified Expert Solution

Question

1 Approved Answer

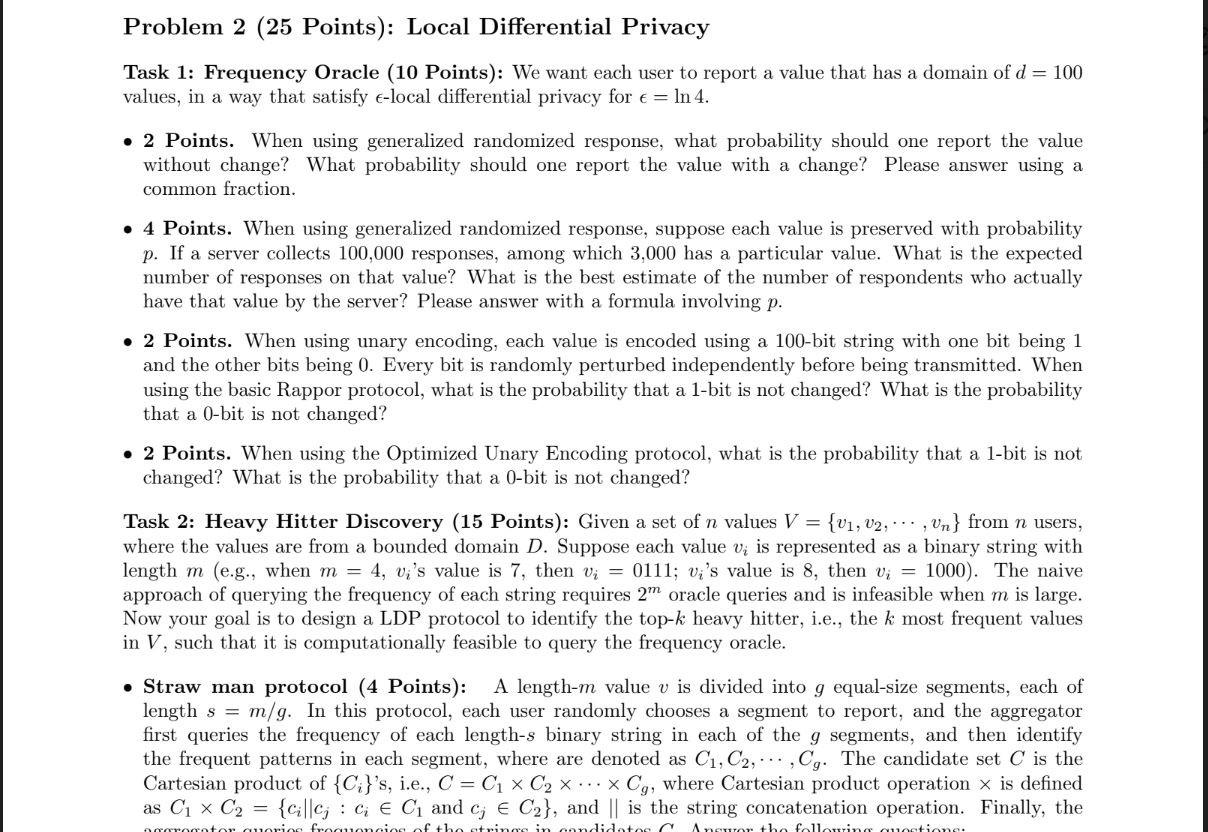

Task 1: Frequency Oracle (10 Points): We want each user to report a value that has a domain of d=100 values, in a way that

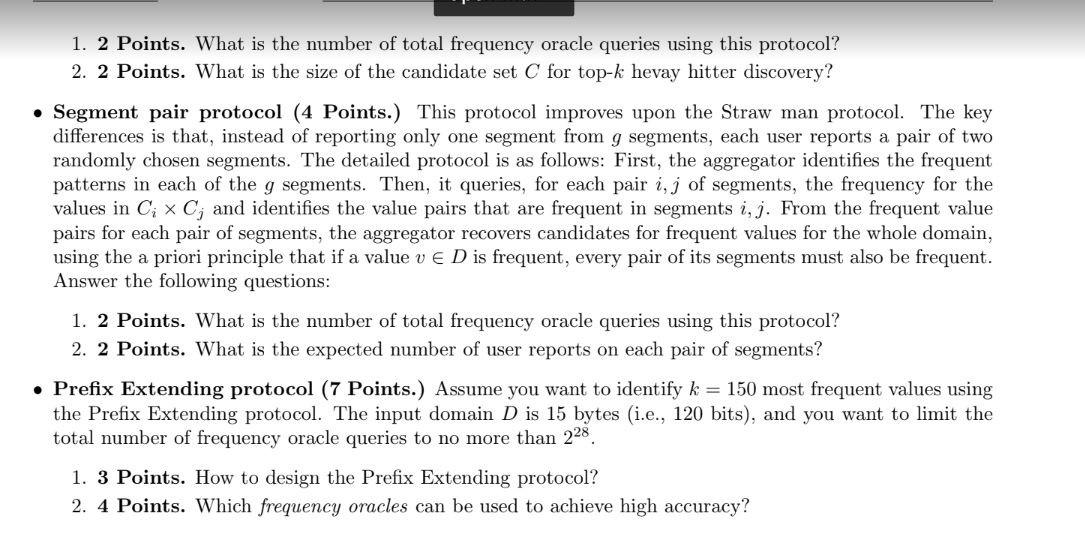

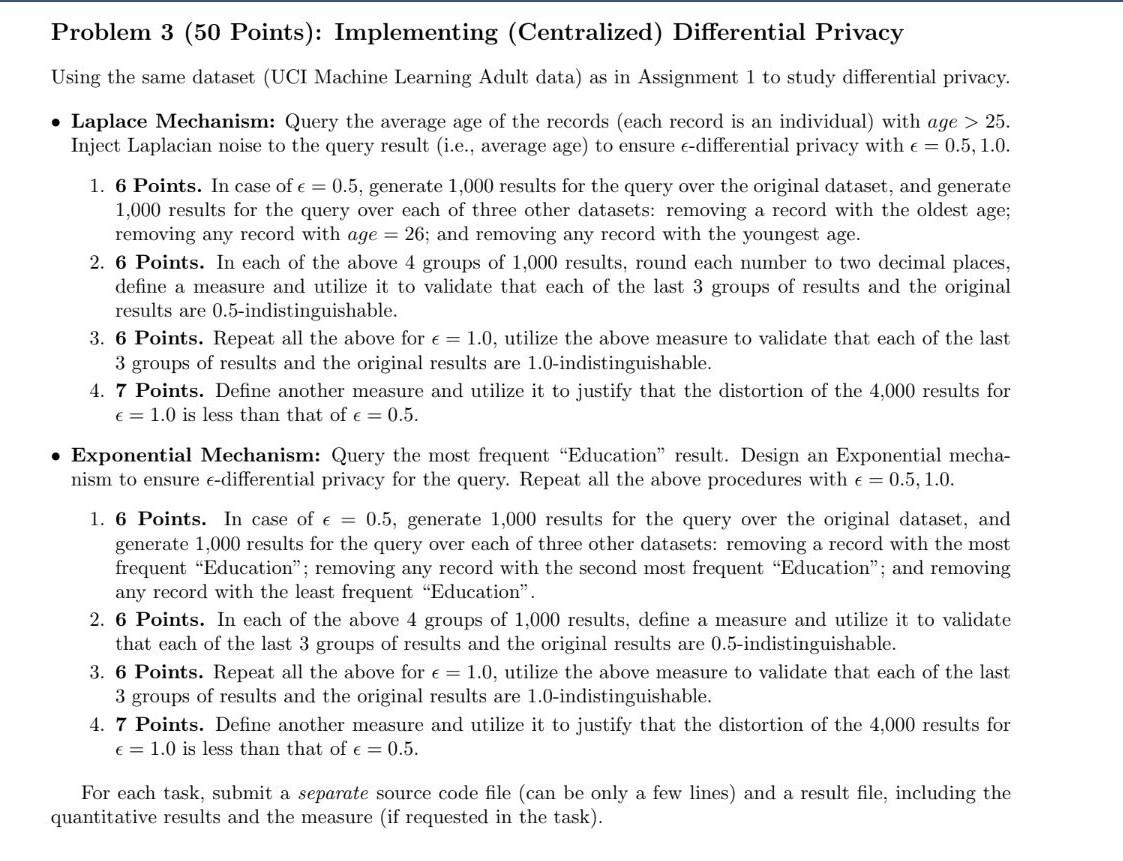

Task 1: Frequency Oracle (10 Points): We want each user to report a value that has a domain of d=100 values, in a way that satisfy -local differential privacy for =ln4. - 2 Points. When using generalized randomized response, what probability should one report the value without change? What probability should one report the value with a change? Please answer using a common fraction. 4 Points. When using generalized randomized response, suppose each value is preserved with probability p. If a server collects 100,000 responses, among which 3,000 has a particular value. What is the expected number of responses on that value? What is the best estimate of the number of respondents who actually have that value by the server? Please answer with a formula involving p. - 2 Points. When using unary encoding, each value is encoded using a 100-bit string with one bit being 1 and the other bits being 0 . Every bit is randomly perturbed independently before being transmitted. When using the basic Rappor protocol, what is the probability that a 1-bit is not changed? What is the probability that a 0 -bit is not changed? - 2 Points. When using the Optimized Unary Encoding protocol, what is the probability that a 1-bit is not changed? What is the probability that a 0-bit is not changed? Task 2: Heavy Hitter Discovery (15 Points): Given a set of n values V={v1,v2,,vn} from n users, where the values are from a bounded domain D. Suppose each value vi is represented as a binary string with length m (e.g., when m=4,vi 's value is 7 , then vi=0111;vi 's value is 8 , then vi=1000 ). The naive approach of querying the frequency of each string requires 2m oracle queries and is infeasible when m is large. Now your goal is to design a LDP protocol to identify the top- k heavy hitter, i.e., the k most frequent values in V, such that it is computationally feasible to query the frequency oracle. - Straw man protocol (4 Points): A length- m value v is divided into g equal-size segments, each of length s=m/g. In this protocol, each user randomly chooses a segment to report, and the aggregator first queries the frequency of each length-s binary string in each of the g segments, and then identify the frequent patterns in each segment, where are denoted as C1,C2,,Cg. The candidate set C is the Cartesian product of {Ci} 's, i.e., C=C1C2Cg, where Cartesian product operation is defined as C1C2={cicj:ciC1 and cjC2}, and is the string concatenation operation. Finally, the 1. 2 Points. What is the number of total frequency oracle queries using this protocol? 2. 2 Points. What is the size of the candidate set C for top- k hevay hitter discovery? - Segment pair protocol (4 Points.) This protocol improves upon the Straw man protocol. The key differences is that, instead of reporting only one segment from g segments, each user reports a pair of two randomly chosen segments. The detailed protocol is as follows: First, the aggregator identifies the frequent patterns in each of the g segments. Then, it queries, for each pair i,j of segments, the frequency for the values in CiCj and identifies the value pairs that are frequent in segments i,j. From the frequent value pairs for each pair of segments, the aggregator recovers candidates for frequent values for the whole domain, using the a priori principle that if a value vD is frequent, every pair of its segments must also be frequent. Answer the following questions: 1. 2 Points. What is the number of total frequency oracle queries using this protocol? 2. 2 Points. What is the expected number of user reports on each pair of segments? - Prefix Extending protocol (7 Points.) Assume you want to identify k=150 most frequent values using the Prefix Extending protocol. The input domain D is 15 bytes (i.e., 120 bits), and you want to limit the total number of frequency oracle queries to no more than 228. 1. 3 Points. How to design the Prefix Extending protocol? 2. 4 Points. Which frequency oracles can be used to achieve high accuracy? Problem 3 (50 Points): Implementing (Centralized) Differential Privacy Using the same dataset (UCI Machine Learning Adult data) as in Assignment 1 to study differential privacy. - Laplace Mechanism: Query the average age of the records (each record is an individual) with age >25. Inject Laplacian noise to the query result (i.e., average age) to ensure -differential privacy with =0.5,1.0. 1. 6 Points. In case of =0.5, generate 1,000 results for the query over the original dataset, and generate 1,000 results for the query over each of three other datasets: removing a record with the oldest age; removing any record with age =26; and removing any record with the youngest age. 2. 6 Points. In each of the above 4 groups of 1,000 results, round each number to two decimal places, define a measure and utilize it to validate that each of the last 3 groups of results and the original results are 0.5-indistinguishable. 3. 6 Points. Repeat all the above for =1.0, utilize the above measure to validate that each of the last 3 groups of results and the original results are 1.0-indistinguishable. 4. 7 Points. Define another measure and utilize it to justify that the distortion of the 4,000 results for =1.0 is less than that of =0.5. - Exponential Mechanism: Query the most frequent "Education" result. Design an Exponential mechanism to ensure -differential privacy for the query. Repeat all the above procedures with =0.5,1.0. 1. 6 Points. In case of =0.5, generate 1,000 results for the query over the original dataset, and generate 1,000 results for the query over each of three other datasets: removing a record with the most frequent "Education"; removing any record with the second most frequent "Education"; and removing any record with the least frequent "Education". 2. 6 Points. In each of the above 4 groups of 1,000 results, define a measure and utilize it to validate that each of the last 3 groups of results and the original results are 0.5-indistinguishable. 3. 6 Points. Repeat all the above for =1.0, utilize the above measure to validate that each of the last 3 groups of results and the original results are 1.0-indistinguishable. 4. 7 Points. Define another measure and utilize it to justify that the distortion of the 4,000 results for =1.0 is less than that of =0.5. For each task, submit a separate source code file (can be only a few lines) and a result file, including the quantitative results and the measure (if requested in the task)

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started