Answered step by step

Verified Expert Solution

Question

1 Approved Answer

Task 2 : Reinforcement Learning Q - Learning with Smart Taxi ( Self - Driving Cab ) . In the lab, you have been asked

Task : Reinforcement Learning

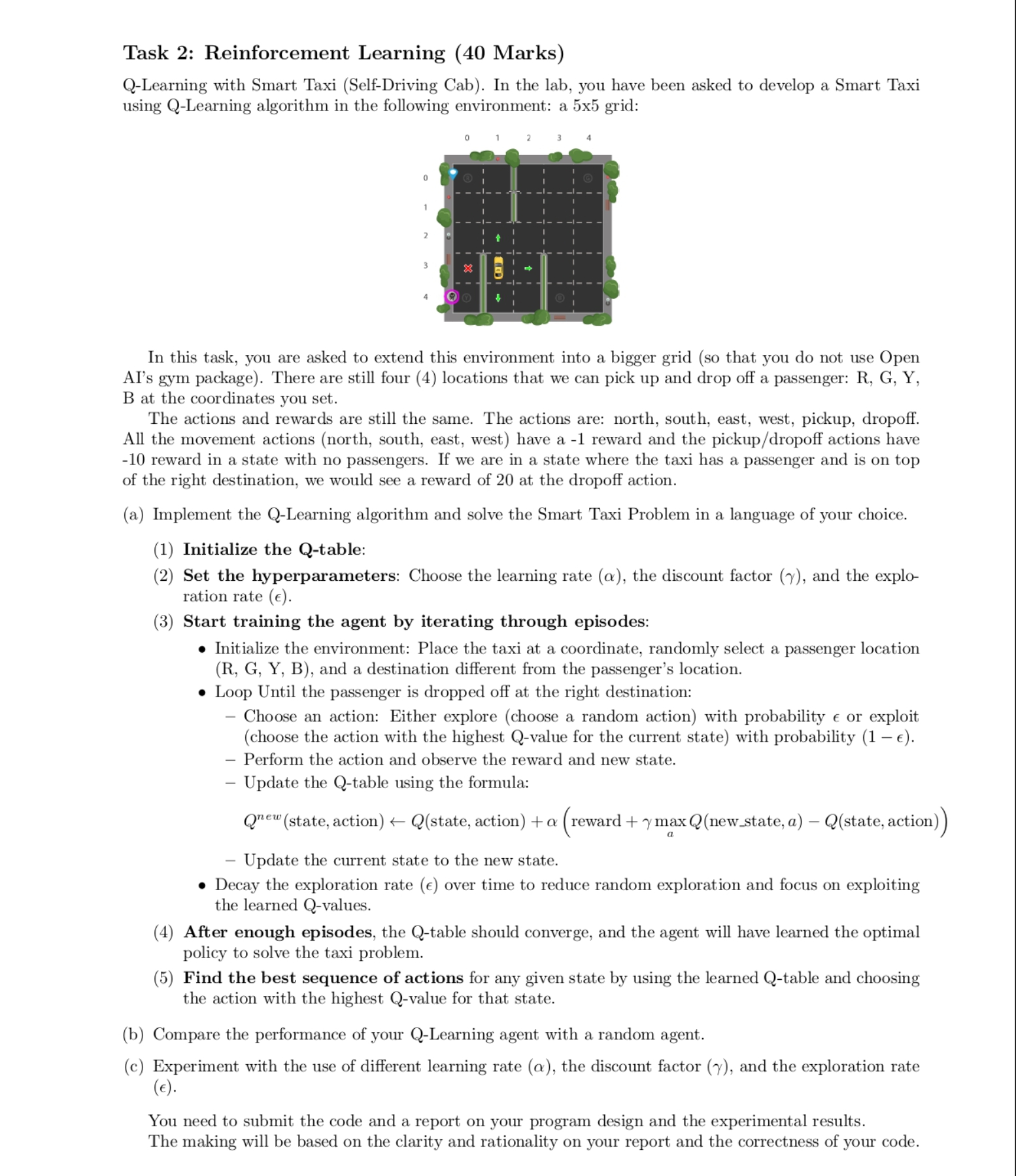

QLearning with Smart Taxi SelfDriving Cab In the lab, you have been asked to develop a Smart Taxi using QLearning algorithm in the following environment: a x grid:

In this task, you are asked to extend this environment into a bigger grid so that you do not use Open AIs gym package There are still four locations that we can pick up and drop off a passenger: R G YB at the coordinates you set.

The actions and rewards are still the same. The actions are: north, south, east, west, pickup, dropoff.

All the movement actions north south, east, west have a reward and the pickupdropoff actions have reward in a state with no passengers. If we are in a state where the taxi has a passenger and is on top of the right destination, we would see a reward of at the dropoff action.

a Implement the QLearning algorithm and solve the Smart Taxi Problem in a language of your choice.

Initialize the Qtable:

Set the hyperparameters: Choose the learning rate alpha the discount factor gamma and the exploration rate epsi

Start training the agent by iterating through episodes:

Initialize the environment: Place the taxi at a coordinate, randomly select a passenger location R G Y B and a destination different from the passengers location.

Loop Until the passenger is dropped off at the right destination:

Choose an action: Either explore choose a random action with probability epsi or exploit choose the action with the highest Qvalue for the current state with probability epsi

Perform the action and observe the reward and new state.

Update the Qtable using the formula:

Qnewstate action Qstate actionalpha reward gamma max a Qnew state, a Qstate action

Update the current state to the new state.

Decay the exploration rate epsi over time to reduce random exploration and focus on exploiting the learned Qvalues.

After enough episodes, the Qtable should converge, and the agent will have learned the optimal policy to solve the taxi problem.

Find the best sequence of actions for any given state by using the learned Qtable and choosing the action with the highest Qvalue for that state.

b Compare the performance of your QLearning agent with a random agent.

c Experiment with the use of different learning rate alpha the discount factor gamma and the exploration rate epsi

You need to submit the code and a report on your program design and the experimental results.

The making will be based on the clarity and rationality on your report and the correctness of your code.

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started