Question:

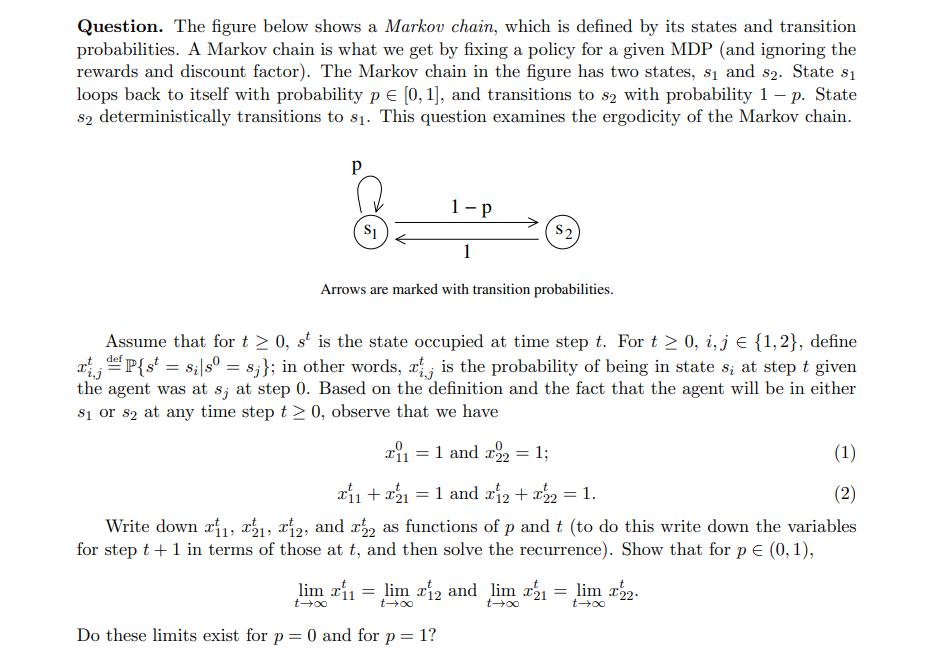

The figure above shows a Markov chain, which is defined by its states and transition probabilities. A Markov chain is what we get by fixing a policy for a given MDP (and ignoring the rewards and discount factor). The Markov chain in the figure has two states, $s_1$ and $s_2$. State $s_1$ loops back to itself with probability $p \in [0, 1]$ and transitions to $s_2$ with probability $1 - p$. State $s_2$ deterministically transitions to $s_1$. This question examines the ergodicity of the Markov chain.

Figure caption: Arrows are marked with transition probabilities ($p$ on the self-loop at $s_1$, $1 - p$ from $s_1$ to $s_2$, and $1$ from $s_2$ to $s_1$).

Assume that for $t \geq 0$, $s_t$ is the state occupied at time step $t$. For $t \geq 0$ and $i, j \in \{1, 2\}$, define

$$x^t_{ij} = \mathbb{P}\{s_t = s_j \mid s_0 = s_i\};$$

in other words, $x^t_{ij}$ is the probability of being in state $s_j$ at step $t$ given that the agent was at $s_i$ at step 0. Based on the definition and the fact that the agent will be in either $s_1$ or $s_2$ at any time step $t \geq 0$, observe that we have

$$x^0_{11} = 1 \quad\text{and}\quad x^0_{22} = 1; \tag{1}$$

$$x^t_{11} + x^t_{12} = 1 \quad\text{and}\quad x^t_{21} + x^t_{22} = 1. \tag{2}$$

Write down $x^t_{11}$, $x^t_{12}$, $x^t_{21}$, and $x^t_{22}$ as functions of $p$ and $t$ (to do this, write down the variables for step $t + 1$ in terms of those at step $t$, and then solve the recurrence). Show that for $p \in (0, 1)$,

$$\lim_{t \to \infty} x^t_{11} = \lim_{t \to \infty} x^t_{21} \quad\text{and}\quad \lim_{t \to \infty} x^t_{12} = \lim_{t \to \infty} x^t_{22}.$$

Do these limits exist for $p = 0$ and for $p = 1$?
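One way to set up the recurrence, offered as a sketch rather than an official solution: conditioning on the state at step $t$ and reading the transition probabilities off the figure ($s_1 \to s_1$ with probability $p$, $s_2 \to s_1$ with probability $1$) gives, for either start state $s_i$,

$$x^{t+1}_{i1} = p\,x^t_{i1} + x^t_{i2} = 1 - (1 - p)\,x^t_{i1},$$

using $x^t_{i1} + x^t_{i2} = 1$. This linear recurrence has fixed point $1/(2 - p)$, and the deviation from that fixed point is multiplied by $p - 1$ at each step, so with $x^0_{11} = 1$ and $x^0_{21} = 0$:

$$x^t_{11} = \frac{1 + (1 - p)(p - 1)^t}{2 - p}, \qquad x^t_{12} = \frac{(1 - p)\left(1 - (p - 1)^t\right)}{2 - p},$$

$$x^t_{21} = \frac{1 - (p - 1)^t}{2 - p}, \qquad x^t_{22} = \frac{1 - p + (p - 1)^t}{2 - p}.$$

The Python below (standard library only; the helper names `step`, `iterate`, and `closed_form` are illustrative, not from the question) uses exact `Fraction` arithmetic to check these closed forms against direct iteration of the recurrence.

```python
from fractions import Fraction


def step(x1: Fraction, p: Fraction) -> Fraction:
    """One step of the recurrence for x^t_{i1}.

    Probability mass at s1 stays there with probability p, and all mass at
    s2 (which is 1 - x1, since the two probabilities sum to 1) moves to s1.
    """
    return p * x1 + (1 - x1)


def iterate(i: int, t: int, p: Fraction) -> tuple[Fraction, Fraction]:
    """Return (x^t_{i1}, x^t_{i2}) by applying `step` t times from s_0 = s_i."""
    x1 = Fraction(1) if i == 1 else Fraction(0)  # x^0_{11} = 1, x^0_{21} = 0
    for _ in range(t):
        x1 = step(x1, p)
    return x1, 1 - x1


def closed_form(i: int, t: int, p: Fraction) -> tuple[Fraction, Fraction]:
    """Return (x^t_{i1}, x^t_{i2}) from the closed-form solution."""
    r = (p - 1) ** t  # (p - 1)^t; Fraction(0) ** 0 == 1, matching t = 0
    if i == 1:
        x1 = (1 + (1 - p) * r) / (2 - p)
    else:
        x1 = (1 - r) / (2 - p)
    return x1, 1 - x1


if __name__ == "__main__":
    # Exact agreement for interior and boundary values of p.
    for p in (Fraction(0), Fraction(1, 3), Fraction(1, 2), Fraction(1)):
        for i in (1, 2):
            for t in range(12):
                assert iterate(i, t, p) == closed_form(i, t, p)

    # p = 0: x^t_{11} alternates, so it has no limit.
    print([int(iterate(1, t, Fraction(0))[0]) for t in range(6)])  # prints [1, 0, 1, 0, 1, 0]
    # p = 1/2: both start states approach (2/3, 1/3).
    print([float(x) for x in iterate(1, 40, Fraction(1, 2))])
    print([float(x) for x in iterate(2, 40, Fraction(1, 2))])
```

Reading the limits off the closed forms: for $p \in (0, 1)$ we have $|p - 1| < 1$, so $(p - 1)^t \to 0$ and both start states converge to $\left(\frac{1}{2 - p}, \frac{1 - p}{2 - p}\right)$. For $p = 0$, $(p - 1)^t = (-1)^t$ oscillates, so $x^t_{11}$ alternates between 1 and 0 and the limits do not exist (the chain is periodic). For $p = 1$, $(p - 1)^t = 0$ for every $t \geq 1$, so the limits exist: $x^t_{11}$ and $x^t_{21}$ both tend to 1 and $x^t_{12}$, $x^t_{22}$ both tend to 0, because $s_1$ becomes absorbing.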
