Answered step by step

Verified Expert Solution

Question

1 Approved Answer

use the code provided to help answer the question intercept and slope, respectively, of the line from which we generate our data. We'll generate a

use the code provided to help answer the question

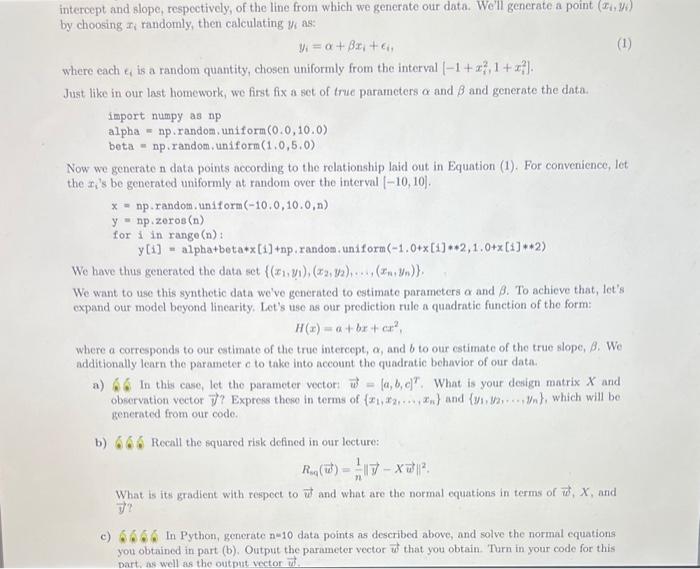

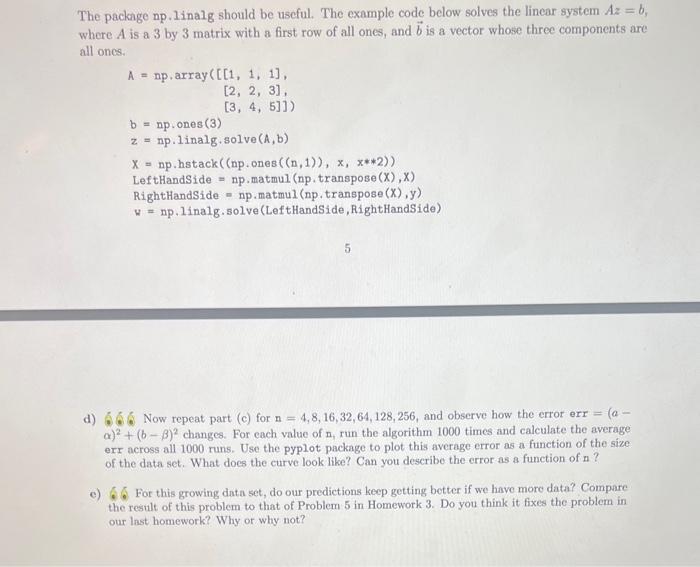

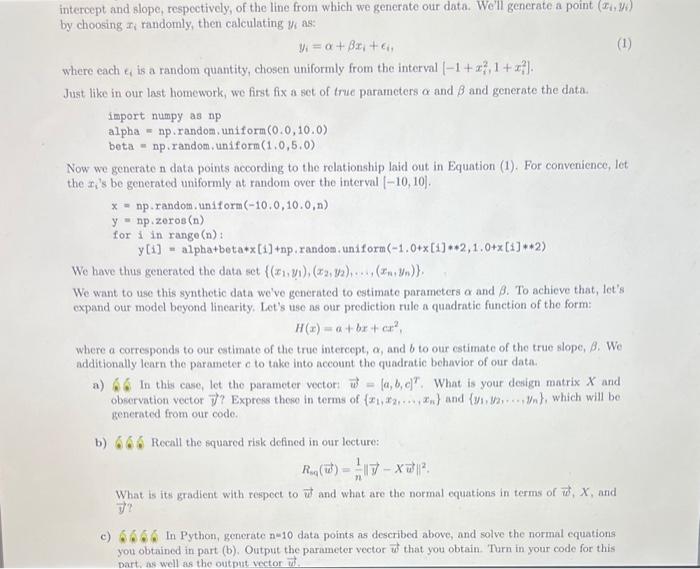

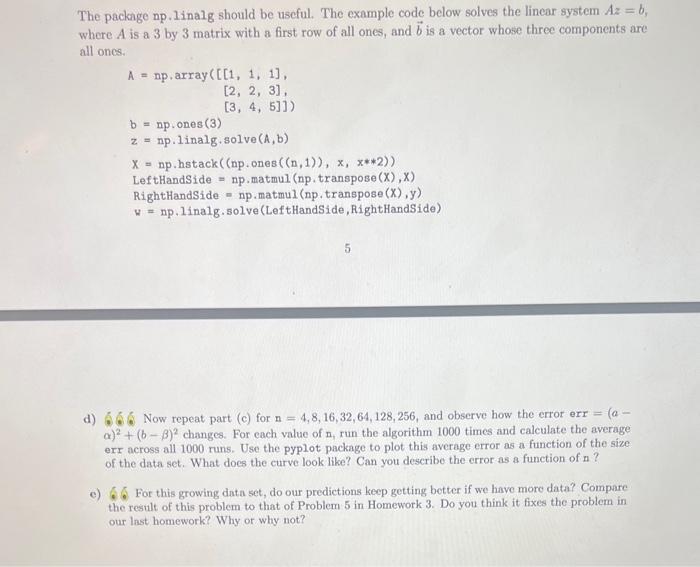

intercept and slope, respectively, of the line from which we generate our data. We'll generate a point (xi,yi) by choosing xi tandomly, then calculating yi as: yi=+xi+i where each i is a random quantity, chosen uniformly from the interval [1+xi2,1+xi2]. Just like in our last homework, we first fix a set of true parameters and and generate the data. import numpy as np alpha = np.random, uniform (0,0,10.0) beta = np.random, uniform (1,0,5,0) Now we generate n data points according to the relationship laid out in Equation (1). For convenience, let the xi 's be generated uniformly at random over the interval [10,10]. x=np.randotm,uniform(10,0,10,0,n)y=np.zoros(n)forinrange(n):y[1]=alpha+beta*x[1]+np,randos.uniform(1,0+x[1]+2,1,0+x[1]+2) We have thus generated the data set {(x1,y1),(x2,y2),,(xn,yn)}, We want to use this synthetic data we've generated to estimate parameters and . To achieve that, let's expand our model beyond linearity. Let's use as our prediction rule a quadratic function of the form: H(x)=a+bx+c2, where a corresponds to our estimate of the true intercept, , and b to our estimate of the true slope, . We additionally learn the parameter o to take into account the quadratic behavior of our data. a) 6 In thas case, let the parameter vector: w=[a,b,c]T. What is your design matrix X and obeervation vector v ? Express these in terms of {x1,x2,,xn} and {y1,y2,,yn}, which will be generated from our code. b) 6.6 Recall the squared risk defined in our lecture: R6oq(w)=n1yXw2. What is its gradient with respect to w and what are the normal equations in terms of w,X, and i)? c) S. . . In Python, generate n=10 data points as described above, and solve the normal equations you obtained in part (b). Output the parameter vector w that you obtain. Turn in your code for this part, as well as the output vector u. The package np.linalg should be uscful. The example code below solves the linear system Az=bs where A is a 3 by 3 matrix with a first row of all ones, and b is a vector whose three components are all ones. A=np,array([[1,1,1],[2,2,3],[3,4,5]])b=np.ones(3)z=nplinalgsolve(A,b)X=nphstack((npones((n,1)),x,x2))LeftHandSide=npmatmul(np,transpose(X),X)RightHandSide=np.matmul(np.transpose(X),y)u=np.1inalg.solve(LeftHandSide,RightHandSide) d) 6.6. Now repeat part (c) for n=4,8,16,32,64,128,256, and observe how the error err =(a err across all 1000 runs. Use the pyplot package to plot this average error as a function of the size of the data set. What does the curve look like? Can you describe the error as a function of n ? e) 6.6 For this growing data set, do our predictions keep getting better if we have more data? Compare the result of this problem to that of Problem 5 in Homework 3 . Do you think it fixes the problem in our last homework? Why or why not? intercept and slope, respectively, of the line from which we generate our data. We'll generate a point (xi,yi) by choosing xi tandomly, then calculating yi as: yi=+xi+i where each i is a random quantity, chosen uniformly from the interval [1+xi2,1+xi2]. Just like in our last homework, we first fix a set of true parameters and and generate the data. import numpy as np alpha = np.random, uniform (0,0,10.0) beta = np.random, uniform (1,0,5,0) Now we generate n data points according to the relationship laid out in Equation (1). For convenience, let the xi 's be generated uniformly at random over the interval [10,10]. x=np.randotm,uniform(10,0,10,0,n)y=np.zoros(n)forinrange(n):y[1]=alpha+beta*x[1]+np,randos.uniform(1,0+x[1]+2,1,0+x[1]+2) We have thus generated the data set {(x1,y1),(x2,y2),,(xn,yn)}, We want to use this synthetic data we've generated to estimate parameters and . To achieve that, let's expand our model beyond linearity. Let's use as our prediction rule a quadratic function of the form: H(x)=a+bx+c2, where a corresponds to our estimate of the true intercept, , and b to our estimate of the true slope, . We additionally learn the parameter o to take into account the quadratic behavior of our data. a) 6 In thas case, let the parameter vector: w=[a,b,c]T. What is your design matrix X and obeervation vector v ? Express these in terms of {x1,x2,,xn} and {y1,y2,,yn}, which will be generated from our code. b) 6.6 Recall the squared risk defined in our lecture: R6oq(w)=n1yXw2. What is its gradient with respect to w and what are the normal equations in terms of w,X, and i)? c) S. . . In Python, generate n=10 data points as described above, and solve the normal equations you obtained in part (b). Output the parameter vector w that you obtain. Turn in your code for this part, as well as the output vector u. The package np.linalg should be uscful. The example code below solves the linear system Az=bs where A is a 3 by 3 matrix with a first row of all ones, and b is a vector whose three components are all ones. A=np,array([[1,1,1],[2,2,3],[3,4,5]])b=np.ones(3)z=nplinalgsolve(A,b)X=nphstack((npones((n,1)),x,x2))LeftHandSide=npmatmul(np,transpose(X),X)RightHandSide=np.matmul(np.transpose(X),y)u=np.1inalg.solve(LeftHandSide,RightHandSide) d) 6.6. Now repeat part (c) for n=4,8,16,32,64,128,256, and observe how the error err =(a err across all 1000 runs. Use the pyplot package to plot this average error as a function of the size of the data set. What does the curve look like? Can you describe the error as a function of n ? e) 6.6 For this growing data set, do our predictions keep getting better if we have more data? Compare the result of this problem to that of Problem 5 in Homework 3 . Do you think it fixes the problem in our last homework? Why or why not

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started