Question

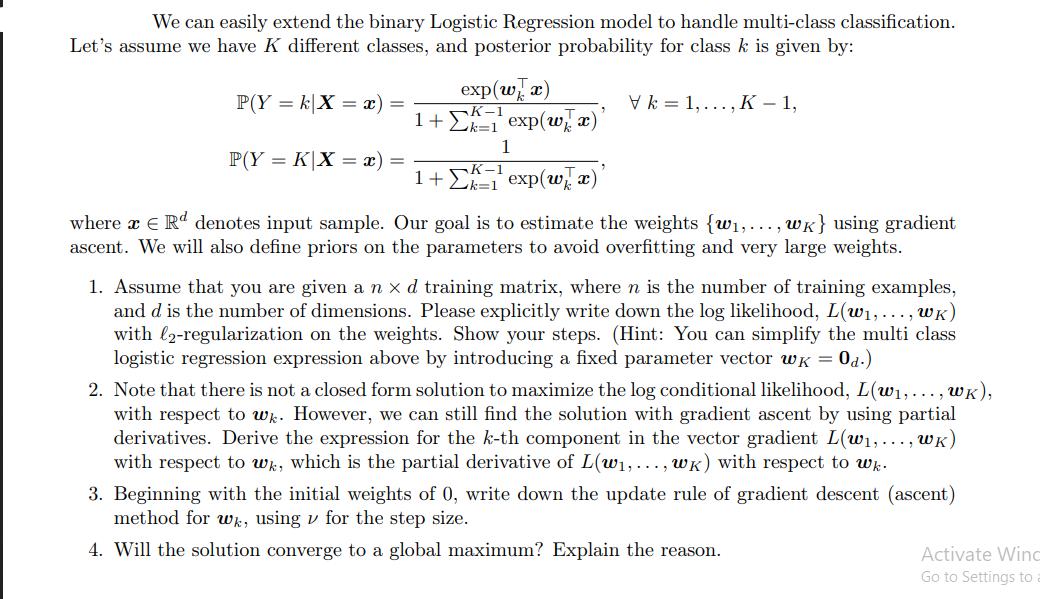

We can easily extend the binary Logistic Regression model to handle multi-class classification. Let's assume we have $K$ different classes, and the posterior probability for class $k$ is given by:

$$P(Y=k \mid X=x) = \frac{\exp(w_k^\top x)}{1+\sum_{j=1}^{K-1}\exp(w_j^\top x)}, \quad \forall k = 1,\dots,K-1,$$

$$P(Y=K \mid X=x) = \frac{1}{1+\sum_{j=1}^{K-1}\exp(w_j^\top x)},$$

where $x \in \mathbb{R}^d$ denotes an input sample. Our goal is to estimate the weights $\{w_1,\dots,w_{K-1}\}$ using gradient ascent. We will also define priors on the parameters to avoid overfitting and very large weights.

1. Assume that you are given an $n \times d$ training matrix, where $n$ is the number of training examples and $d$ is the number of dimensions. Please explicitly write down the log likelihood $L(w_1,\dots,w_K)$ with $L_2$-regularization on the weights. Show your steps. (Hint: You can simplify the multi-class logistic regression expression above by introducing a fixed parameter vector $w_K = 0_d$.)
2. Note that there is no closed-form solution that maximizes the log conditional likelihood $L(w_1,\dots,w_K)$ with respect to $w_k$. However, we can still find the solution with gradient ascent by using partial derivatives. Derive the expression for the $k$-th component of the gradient vector $\nabla L(w_1,\dots,w_K)$, i.e., the partial derivative of $L(w_1,\dots,w_K)$ with respect to $w_k$.
3. Beginning with initial weights of 0, write down the update rule of the gradient descent (ascent) method for $w_k$, using $\eta$ for the step size.
4. Will the solution converge to a global maximum? Explain the reason.
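
A minimal NumPy sketch of the model and the gradient-ascent procedure the question describes, assuming the $L_2$ penalty is written as $\frac{\lambda}{2}\sum_k \lVert w_k\rVert^2$ (one common convention; it corresponds to a zero-mean Gaussian prior with variance $1/\lambda$ on each weight). The function names (`log_probabilities`, `log_likelihood`, `gradient`, `fit`), the regularization strength `lam`, the step size `eta`, and the 0-indexed labels (class $K$ stored as index `K-1`) are illustrative assumptions, not part of the original question. The weights are stored as a $K \times d$ matrix whose last row is the fixed vector $w_K = 0_d$, so an ordinary softmax over all $K$ scores reproduces the posterior formulas in the question.

```python
import numpy as np


def log_probabilities(W, X):
    """Return log P(Y=k | X=x_i) as an (n, K) array.

    W is (K, d) with W[-1] fixed at 0_d, so the softmax below equals
    exp(w_k^T x) / (1 + sum_{j<K} exp(w_j^T x)) for k < K and
    1 / (1 + sum_{j<K} exp(w_j^T x)) for k = K.
    """
    scores = X @ W.T                              # (n, K); last column is all zeros
    m = scores.max(axis=1, keepdims=True)         # shift for numerical stability
    return scores - m - np.log(np.exp(scores - m).sum(axis=1, keepdims=True))


def log_likelihood(W, X, y, lam):
    """Regularized log conditional likelihood (assumed penalty: lam/2 * sum_k ||w_k||^2)."""
    n = X.shape[0]
    log_p = log_probabilities(W, X)
    return log_p[np.arange(n), y].sum() - 0.5 * lam * np.sum(W ** 2)


def gradient(W, X, y, lam):
    """Row k holds dL/dw_k = sum_i (1[y_i = k] - P(Y=k | x_i)) x_i - lam * w_k."""
    n, K = X.shape[0], W.shape[0]
    P = np.exp(log_probabilities(W, X))
    Y = np.zeros((n, K))
    Y[np.arange(n), y] = 1.0                      # one-hot indicators 1[y_i = k]
    G = (Y - P).T @ X - lam * W
    G[-1] = 0.0                                   # w_K is fixed, so it gets no update
    return G


def fit(X, y, K, eta=0.01, lam=1.0, iters=500):
    """Gradient ascent from all-zero weights: w_k <- w_k + eta * dL/dw_k."""
    W = np.zeros((K, X.shape[1]))
    for _ in range(iters):
        W += eta * gradient(W, X, y, lam)
    return W
```

For example, `fit(X, y, K=3, eta=0.05, lam=0.5)` runs the update from zero weights on an `(n, d)` array `X` with integer labels `y` in `{0, 1, 2}`. Because `gradient` zeroes the last row, $w_K$ never moves, matching the hint's reparameterization. With `lam > 0` the objective is strictly concave in $w_1,\dots,w_{K-1}$, so a sufficiently small `eta` should drive the iterates toward its unique maximizer.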
