You are required to design and implement three crawlers, a buffer and a classifier in C/C++ on Linux (other languages are not allowed). Mutual exclusion and synchronization must be done with mutex and semaphore provided in libraries and .

CS3103: Operating Systems Spring 2021 Programming Assignment 2 1 Goals The purpose of this assignment is to help you: get familiar with multi-threaded programming using pthread get familiar with mutual exclusion using mutexes get familiar with synchronization using semaphores 2 Background Sentiment analysis, which is a powerful technique based on natural language processing, has a wide range of applications, including consumer reviews analysis, recommender system, political campaigning, stock speculation, etc. A sentiment analysis model requires a large text corpus, which consists of classified articles grabbed from the internet using web crawlers. In the simplest scenario, a text corpus can be built by two components: a web crawler and a classifier. The crawler browse through web pages and grabs articles from websites. The grabbed articles are stored in a buffer, from which the classifier processes articles and classifies them. Considering the complexity of modern websites, it usually takes a long time for a crawler to locate and grab an article from the web page. So, the speed of crawlers is usually too slow for the classifier. Thus, multiple crawlers would be a better choice. 3 Components and Requirements You are required to design and implement three crawlers, a buffer and a classifier in C/C++ on Linux (other languages are not allowed). Mutual exclusion and synchronization must be done with mutex and semaphore provided in libraries

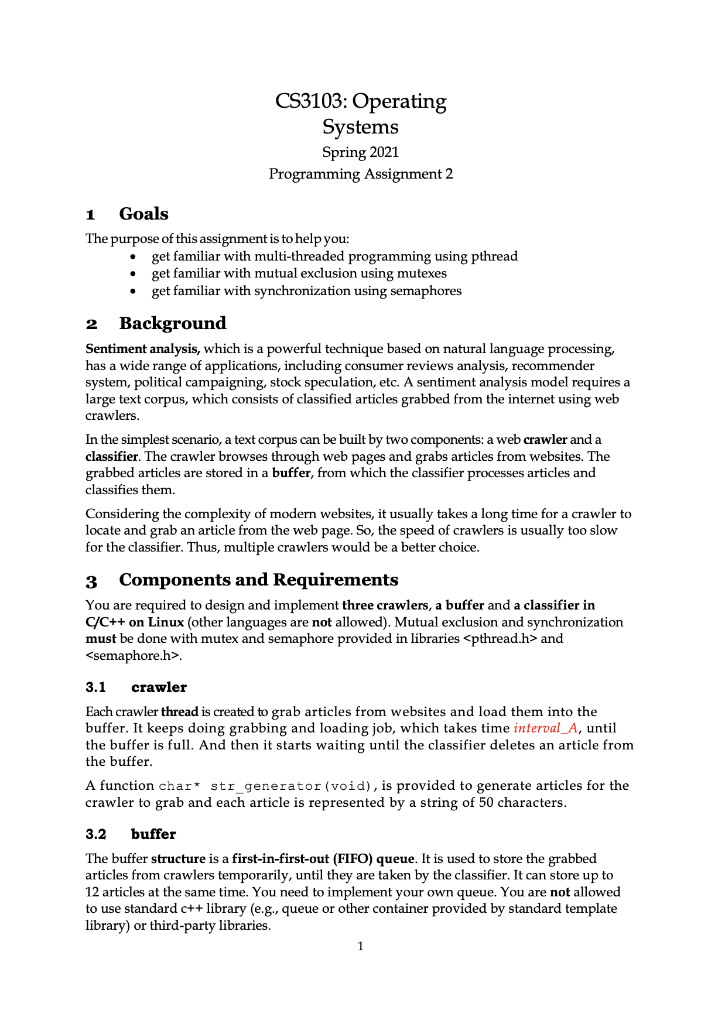

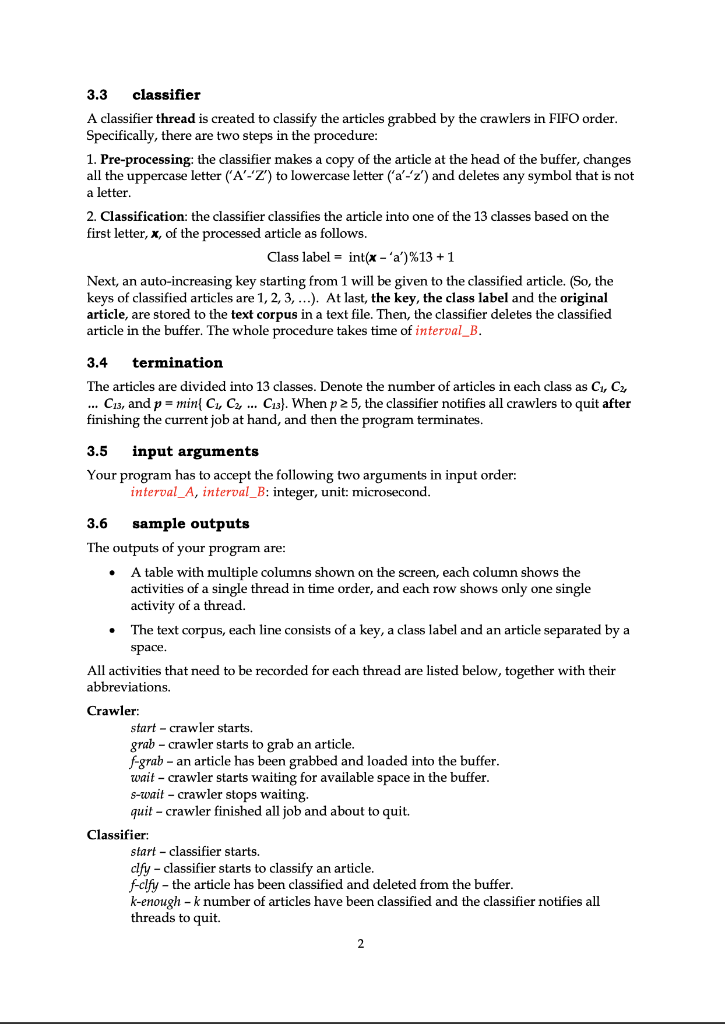

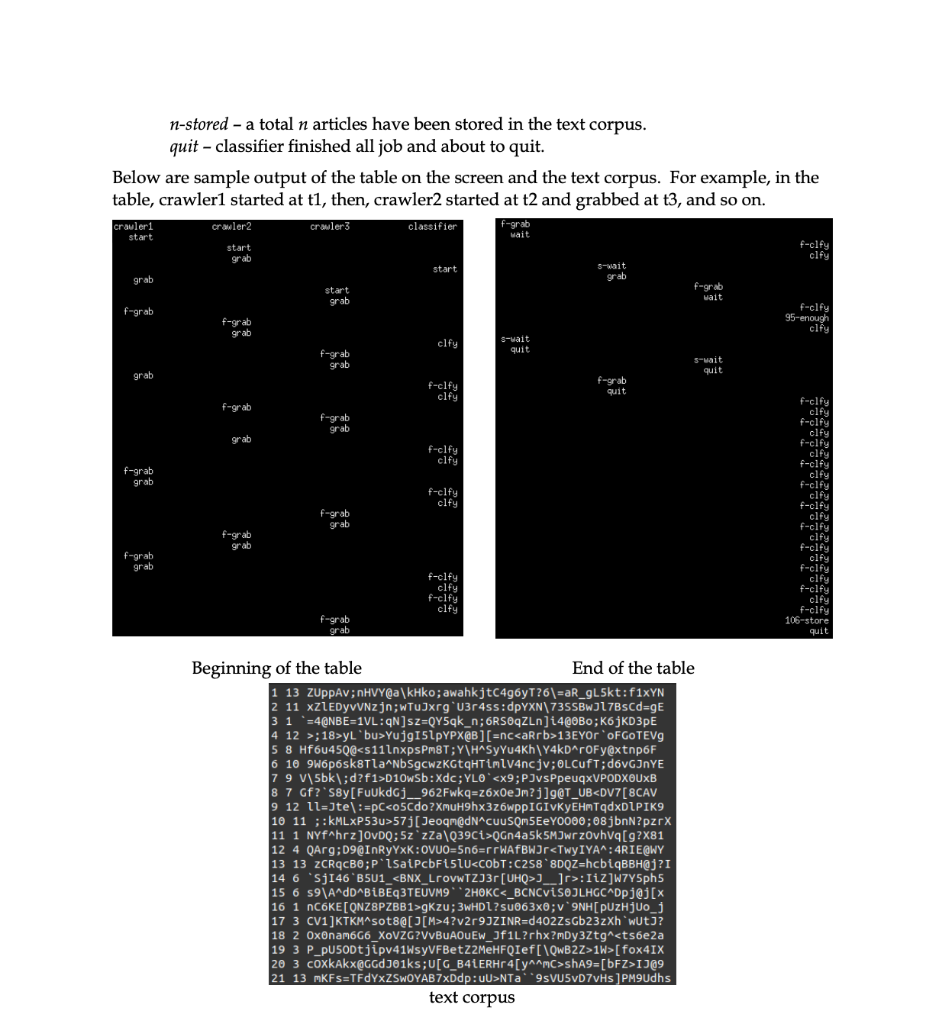

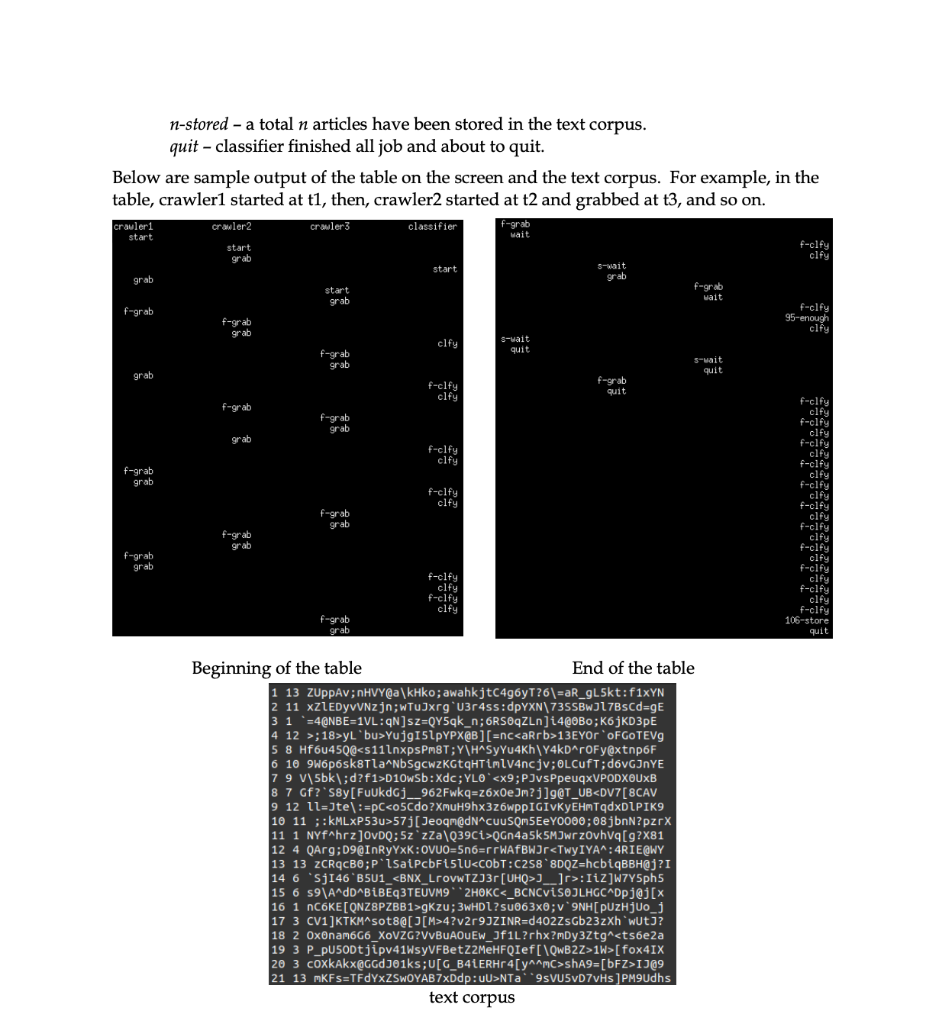

and 3.1 crawler Each crawler thread is created to grab articles from websites and load them into the buffer. It keeps doing grabbing and loading job, which takes time interval_A, until the buffer is full. And then it starts waiting until the classifier deletes an article from the buffer. A function chart str_generator (void), is provided to generate articles for the crawler to grab and each article is represented by a string of 50 characters. 3.2 buffer The buffer structure is a first-in-first-out (FIFO) queue. It is used to store the grabbed articles from crawlers temporarily, until they are taken by the classifier. It can store up to 12 articles at the same time. You need to implement your own queue. You are not allowed to use standard c++ library (e.g., queue or other container provided by standard template library) or third-party libraries. 3.3 classifier A classifier thread is created to classify the articles grabbed by the crawlers in FIFO order. Specifically, there are two steps in the procedure: 1. Pre-processing the classifier makes a copy of the article at the head of the buffer, changes all the uppercase letter ('A'-'Z') to lowercase letter ('a-'') and deletes any symbol that is not a letter. 2. Classification: the classifier classifies the article into one of the 13 classes based on the first letter, x, of the processed article as follows. Class label = int(x -'a')%13+1 Next, an auto-increasing key starting from 1 will be given to the classified article. (So, the keys of classified articles are 1, 2, 3,...). At last, the key, the class label and the original article, are stored to the text corpus in a text file. Then, the classifier deletes the classified article in the buffer. The whole procedure takes time of interval_B. 3.4 termination The articles are divided into 13 classes. Denote the number of articles in each class as C1, C2 ... C13, and p = min C, C2, ... C13). When p 25, the classifier notifies all crawlers to quit after finishing the current job at hand, and then the program terminates. 3.5 input arguments Your program has to accept the following two arguments in input order: interval_A, interval_B: integer, unit: microsecond. 3.6 sample outputs The outputs of your program are: A table with multiple columns shown on the screen, each column shows the activities of a single thread in time order, and each row shows only one single activity of a thread. The text corpus, each line consists of a key, a class label and an article separated by a space. All activities that need to be recorded for each thread are listed below, together with their abbreviations. Crawler: start-crawler starts. grab - crawler starts to grab an article. f-grab - an article has been grabbed and loaded into the buffer. wait - crawler starts waiting for available space in the buffer. s-wait - crawler stops waiting quit - crawler finished all job and about to quit. Classifier: start - classifier starts. dfy - classifier starts to classify an article. f-clfy - the article has been classified and deleted from the buffer. k-enough -k number of articles have been classified and the classifier notifies all threads to quit. 2 n-stored - a total n articles have been stored in the text corpus. quit - classifier finished all job and about to quit. Below are sample output of the table on the screen and the text corpus. For example, in the table, crawler1 started at t1, then, crawler2 started at t2 and grabbed at t3, and so on. crauler1 crwler3 crawler2 classifier f-grab wait start start grab f-clfy clfy start grab grab start grab f-grab wait f-grab f-grab grab f-clfy 95-enough clfy clfy s-wait quit f-grab grab suwait quit grab f-clfy clfy f-grab quit f-grab f-grab grab grab f-clfy clfy f-cify clfy f-clfy clfy f-clfy clfy f-clfy f-clfy clfy f-grab grab f-elfy clfy clfy f-grab grab f-grab grab f-grab grab f-clfy clfy f-clfy clfy f-clfy cify f-clfy clfy f-clfy clfy f-clfy 106-store quit f-clfy clfy f-clfy clfy f-arab grab Beginning of the table End of the table 1 13 ZUppAv; nHVY@a|kHko; awahkjtc4g6yT?6\=aR_gL5kt:f1xYN 2 11 xzLEDyvvNzjn;wtuwxrgU3r4ss:dpYXN 7355Bw317BsCd=gE 3 1 '=4@NBE=1VL:qN]sz=QY5qk_n; 6RSOZLn] i4@0B0;K6jKD3pE 4 12 >;18>yL'bu>Yujg151PYPX@B][=nc13EYOR OF GOTEVO 5 8 Hf6u45Q@D10wsb:Xdc;YLO'57j[Jeoqm@dNCuUSQm5EeY0000; 08jbnN?pzrX 11 1 NYfAhrz]OVDQ; 5z 'zza Q39Ci>QGn4a5k5MJwrzovhvq[g?X81 12 4 QArg;D9@InRyYxK:OVUO=5n6=rrWAFBWJrsTwyIYAM:4RIE@WY 13 13 ZCRqCBO;P'lsaiPcbFislu3__]r>:1iZ]w7Y5ph5 15 6 59\AndDBiBEq3TEUVM9* *2HOKC<_bcncvisojlhgc nc6ke>gkzu; 3WHD1?Su063x0;v9NH[PUzHjuo_j 17 3 CV1]KTKMsot8@[J[M>4?v2r9JZINR=d402ZsGb23zxh wutJ? 18 2 Oxonam666_XOVZG?VVBUAOUEw_Jf1L?rhx?mDy3Ztg^1W>[fox4IX 20 3 coxkAkx@GGDJO1ks;U[G_B4iERHr4[ya-mc>shA9=[bFZ>IJ@9 21 13 mKFS=TFdYxZSWOYAB7xDdp: UU>NTA* *9sVU5vD7vHs] PM9Udhs text corpus CS3103: Operating Systems Spring 2021 Programming Assignment 2 1 Goals The purpose of this assignment is to help you: get familiar with multi-threaded programming using pthread get familiar with mutual exclusion using mutexes get familiar with synchronization using semaphores 2 Background Sentiment analysis, which is a powerful technique based on natural language processing, has a wide range of applications, including consumer reviews analysis, recommender system, political campaigning, stock speculation, etc. A sentiment analysis model requires a large text corpus, which consists of classified articles grabbed from the internet using web crawlers. In the simplest scenario, a text corpus can be built by two components: a web crawler and a classifier. The crawler browse through web pages and grabs articles from websites. The grabbed articles are stored in a buffer, from which the classifier processes articles and classifies them. Considering the complexity of modern websites, it usually takes a long time for a crawler to locate and grab an article from the web page. So, the speed of crawlers is usually too slow for the classifier. Thus, multiple crawlers would be a better choice. 3 Components and Requirements You are required to design and implement three crawlers, a buffer and a classifier in C/C++ on Linux (other languages are not allowed). Mutual exclusion and synchronization must be done with mutex and semaphore provided in libraries and 3.1 crawler Each crawler thread is created to grab articles from websites and load them into the buffer. It keeps doing grabbing and loading job, which takes time interval_A, until the buffer is full. And then it starts waiting until the classifier deletes an article from the buffer. A function chart str_generator (void), is provided to generate articles for the crawler to grab and each article is represented by a string of 50 characters. 3.2 buffer The buffer structure is a first-in-first-out (FIFO) queue. It is used to store the grabbed articles from crawlers temporarily, until they are taken by the classifier. It can store up to 12 articles at the same time. You need to implement your own queue. You are not allowed to use standard c++ library (e.g., queue or other container provided by standard template library) or third-party libraries. 3.3 classifier A classifier thread is created to classify the articles grabbed by the crawlers in FIFO order. Specifically, there are two steps in the procedure: 1. Pre-processing the classifier makes a copy of the article at the head of the buffer, changes all the uppercase letter ('A'-'Z') to lowercase letter ('a-'') and deletes any symbol that is not a letter. 2. Classification: the classifier classifies the article into one of the 13 classes based on the first letter, x, of the processed article as follows. Class label = int(x -'a')%13+1 Next, an auto-increasing key starting from 1 will be given to the classified article. (So, the keys of classified articles are 1, 2, 3,...). At last, the key, the class label and the original article, are stored to the text corpus in a text file. Then, the classifier deletes the classified article in the buffer. The whole procedure takes time of interval_B. 3.4 termination The articles are divided into 13 classes. Denote the number of articles in each class as C1, C2 ... C13, and p = min C, C2, ... C13). When p 25, the classifier notifies all crawlers to quit after finishing the current job at hand, and then the program terminates. 3.5 input arguments Your program has to accept the following two arguments in input order: interval_A, interval_B: integer, unit: microsecond. 3.6 sample outputs The outputs of your program are: A table with multiple columns shown on the screen, each column shows the activities of a single thread in time order, and each row shows only one single activity of a thread. The text corpus, each line consists of a key, a class label and an article separated by a space. All activities that need to be recorded for each thread are listed below, together with their abbreviations. Crawler: start-crawler starts. grab - crawler starts to grab an article. f-grab - an article has been grabbed and loaded into the buffer. wait - crawler starts waiting for available space in the buffer. s-wait - crawler stops waiting quit - crawler finished all job and about to quit. Classifier: start - classifier starts. dfy - classifier starts to classify an article. f-clfy - the article has been classified and deleted from the buffer. k-enough -k number of articles have been classified and the classifier notifies all threads to quit. 2 n-stored - a total n articles have been stored in the text corpus. quit - classifier finished all job and about to quit. Below are sample output of the table on the screen and the text corpus. For example, in the table, crawler1 started at t1, then, crawler2 started at t2 and grabbed at t3, and so on. crauler1 crwler3 crawler2 classifier f-grab wait start start grab f-clfy clfy start grab grab start grab f-grab wait f-grab f-grab grab f-clfy 95-enough clfy clfy s-wait quit f-grab grab suwait quit grab f-clfy clfy f-grab quit f-grab f-grab grab grab f-clfy clfy f-cify clfy f-clfy clfy f-clfy clfy f-clfy f-clfy clfy f-grab grab f-elfy clfy clfy f-grab grab f-grab grab f-grab grab f-clfy clfy f-clfy clfy f-clfy cify f-clfy clfy f-clfy clfy f-clfy 106-store quit f-clfy clfy f-clfy clfy f-arab grab Beginning of the table End of the table 1 13 ZUppAv; nHVY@a|kHko; awahkjtc4g6yT?6\=aR_gL5kt:f1xYN 2 11 xzLEDyvvNzjn;wtuwxrgU3r4ss:dpYXN 7355Bw317BsCd=gE 3 1 '=4@NBE=1VL:qN]sz=QY5qk_n; 6RSOZLn] i4@0B0;K6jKD3pE 4 12 >;18>yL'bu>Yujg151PYPX@B][=nc13EYOR OF GOTEVO 5 8 Hf6u45Q@D10wsb:Xdc;YLO'57j[Jeoqm@dNCuUSQm5EeY0000; 08jbnN?pzrX 11 1 NYfAhrz]OVDQ; 5z 'zza Q39Ci>QGn4a5k5MJwrzovhvq[g?X81 12 4 QArg;D9@InRyYxK:OVUO=5n6=rrWAFBWJrsTwyIYAM:4RIE@WY 13 13 ZCRqCBO;P'lsaiPcbFislu3__]r>:1iZ]w7Y5ph5 15 6 59\AndDBiBEq3TEUVM9* *2HOKC<_bcncvisojlhgc nc6ke>gkzu; 3WHD1?Su063x0;v9NH[PUzHjuo_j 17 3 CV1]KTKMsot8@[J[M>4?v2r9JZINR=d402ZsGb23zxh wutJ? 18 2 Oxonam666_XOVZG?VVBUAOUEw_Jf1L?rhx?mDy3Ztg^1W>[fox4IX 20 3 coxkAkx@GGDJO1ks;U[G_B4iERHr4[ya-mc>shA9=[bFZ>IJ@9 21 13 mKFS=TFdYxZSWOYAB7xDdp: UU>NTA* *9sVU5vD7vHs] PM9Udhs text corpus