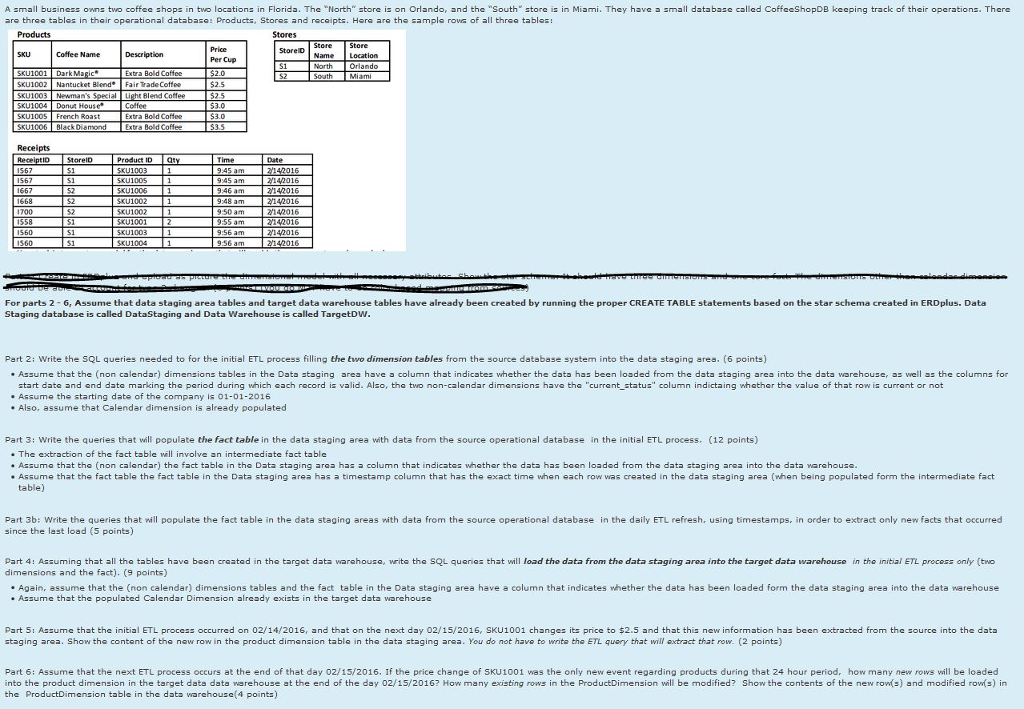

Question:

A small business owns two coffee shops in two locations in Florida. The "North" store is in Orlando, and the "South" store is in Miami. They have a small database called CoffeeShopDB keeping track of their operations. There are three tables in their operational database: Products, Stores, and Receipts.

The sample rows of all three tables were given as an image and survive only as fragments:

- Stores (recovered columns: StoreName, Location): North, Orlando
- Products (recovered columns: Coffee Name, Price Per Cup): SKU1002, Nantucket Blend, Fair Trade Coffee, SKU1004, Donut House, SKU1006, Black Diamond, Extra Bold Coffee
- Receipts (recovered columns: ReceiptID, StoreID, Product ID): 2/14/2016, 700, S2, SKU1002, 9:50 am, 2/14/2016, 560

For Parts 2-6, assume that the data staging area tables and the target data warehouse tables have already been created by running the proper CREATE TABLE statements based on the star schema created in ERDplus. The data staging database is called DataStaging and the data warehouse is called TargetDW.

Part 2: Write the SQL queries needed for the initial ETL process filling the two dimension tables from the source database system into the data staging area. (6 points)

- Assume that the (non-calendar) dimension tables in the data staging area have a column that indicates whether the data has been loaded from the data staging area into the data warehouse, as well as columns for the start date and end date marking the period during which each record is valid.
- Also, the two non-calendar dimensions have a "current status" column indicating whether the value of that row is current or not.
- Assume the starting date of the company is 01-01-2016.
- Also, assume that the Calendar dimension is already populated.

Part 3: Write the queries that will populate the fact table in the data staging area with data from the source operational database in the initial ETL process. (12 points)

- The extraction of the fact table will involve an intermediate fact table.
- Assume that the fact table in the data staging area has a column that indicates whether the data has been loaded from the data staging area into the data warehouse.
- Assume that the fact table in the data staging area has a timestamp column that holds the exact time when each row was created in the data staging area (when being populated from the intermediate fact table).

Part 3b: Write the queries that will populate the fact table in the data staging area with data from the source operational database in the daily ETL refresh, using timestamps, in order to extract only new facts that occurred since the last load. (5 points)

Part 4: Assuming that all the tables have been created in the target data warehouse, write the SQL queries that will load the data from the data staging area into the target data warehouse in the initial ETL process only (two dimensions and the fact). (9 points)

- Again, assume that the (non-calendar) dimension tables and the fact table in the data staging area have a column that indicates whether the data has been loaded from the data staging area into the data warehouse.
- Assume that the populated Calendar dimension already exists in the target data warehouse.

Part 5: Assume that the initial ETL process occurred on 02/14/2016, and that on the next day, 02/15/2016, SKU 1001 changes its price to $2.5 and that this new information has been extracted from the source into the data staging area. Show the content of the new row in the product dimension table in the data staging area. You do not have to write the ETL query that will extract that row. (2 points)

Part 6: Assume that the next ETL process occurs at the end of that day, 02/15/2016. If the price change of SKU 1001 was the only new event regarding products during that 24-hour period, how many new rows will be loaded into the product dimension in the target data warehouse at the end of the day 02/15/2016? How many existing rows in the ProductDimension will be modified? Show the contents of the new row(s) and modified row(s) in the ProductDimension table in the data warehouse. (4 points)
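
The start date, end date, and "current status" columns described above resemble what is commonly called a Type 2 slowly changing dimension, where a change closes the existing row and adds a new one. The following is a minimal sketch of that mechanic for the price change in Parts 5 and 6, run against an in-memory SQLite database through Python's `sqlite3` module. The column names, the surrogate key, the status labels, the previous price of 2.00, and the choice to end the old row on the day before the change are all assumptions made for illustration; the real schema comes from the ERDplus star schema, which is not shown here.

```python
import sqlite3

# Hypothetical, simplified stand-in for ProductDimension in TargetDW.
# All column names and status labels below are assumptions.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE ProductDimension (
        ProductKey    INTEGER PRIMARY KEY AUTOINCREMENT,  -- surrogate key
        ProductID     TEXT NOT NULL,                      -- source key
        PricePerCup   REAL NOT NULL,
        StartDate     TEXT NOT NULL,                      -- ISO date
        EndDate       TEXT,                               -- NULL = open-ended
        CurrentStatus TEXT NOT NULL
    )
    """
)

# Row as it might look after the initial load. The price 2.00 is an
# assumed placeholder; the original price is not given in the question.
conn.execute(
    "INSERT INTO ProductDimension "
    "(ProductID, PricePerCup, StartDate, EndDate, CurrentStatus) "
    "VALUES ('SKU1001', 2.00, '2016-01-01', NULL, 'Current')"
)


def apply_price_change(conn, product_id, new_price, change_date):
    """Close the current row for product_id and add a new current row."""
    # Modify the existing row: set its end date (assumed convention: the
    # day before the change) and mark it as no longer current.
    conn.execute(
        "UPDATE ProductDimension "
        "SET EndDate = date(?, '-1 day'), CurrentStatus = 'Not Current' "
        "WHERE ProductID = ? AND CurrentStatus = 'Current'",
        (change_date, product_id),
    )
    # Add the new row carrying the new price, valid from the change date.
    conn.execute(
        "INSERT INTO ProductDimension "
        "(ProductID, PricePerCup, StartDate, EndDate, CurrentStatus) "
        "VALUES (?, ?, ?, NULL, 'Current')",
        (product_id, new_price, change_date),
    )
    conn.commit()


apply_price_change(conn, "SKU1001", 2.50, "2016-02-15")

rows = conn.execute(
    "SELECT ProductID, PricePerCup, StartDate, EndDate, CurrentStatus "
    "FROM ProductDimension ORDER BY ProductKey"
).fetchall()
for row in rows:
    print(row)
```

Under these assumptions, the refresh adds one new row for SKU1001 and modifies one existing row (its end date and status). A course solution may instead use a sentinel end date rather than NULL, or end the old row on the change date itself, so the exact values should follow whatever convention the star schema defines.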
