Answered step by step

Verified Expert Solution

Question

1 Approved Answer

Consider the fully recurrent network architecture ( without output activation and bias units ) defined as s ( t ) = W x ( t

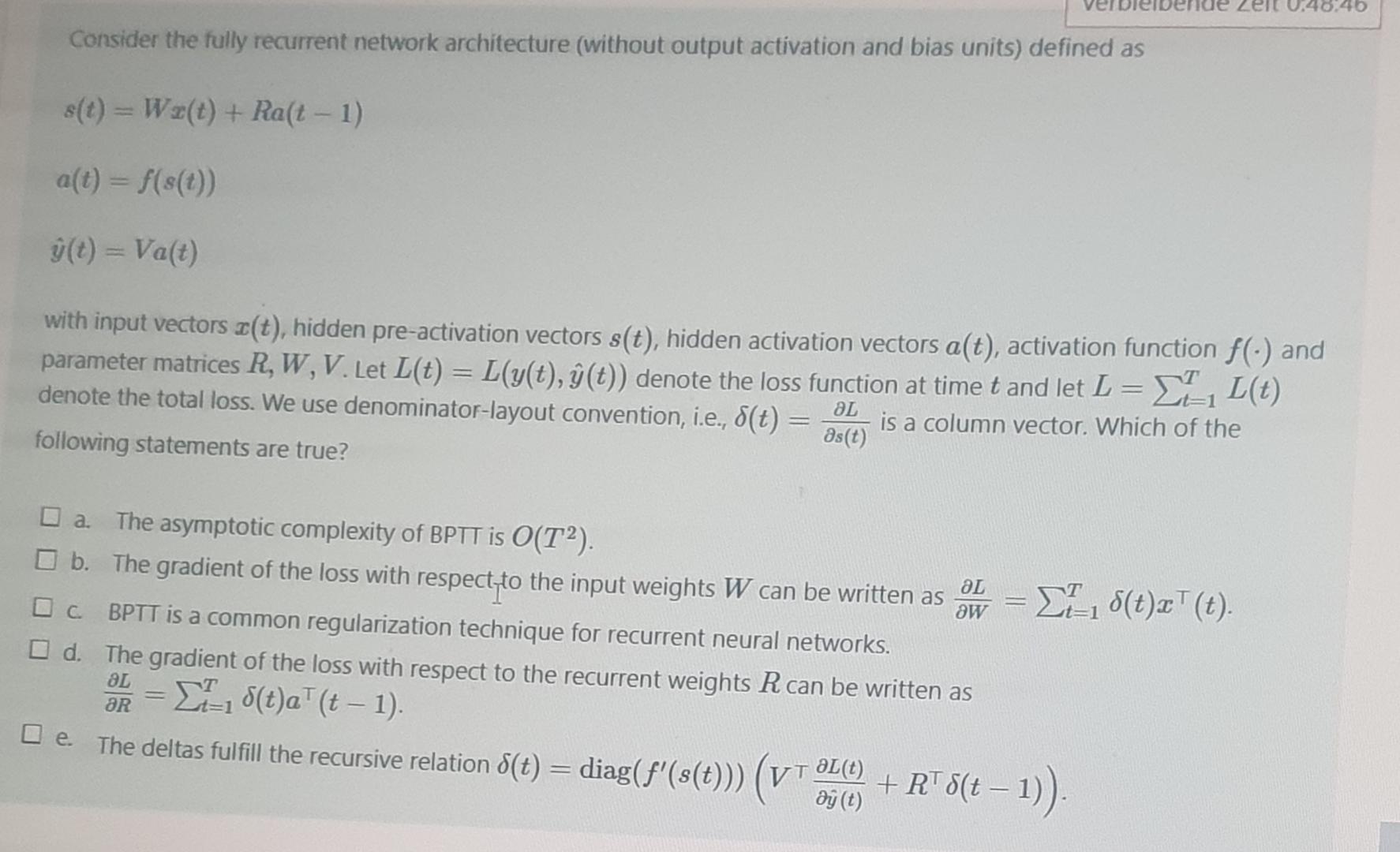

Consider the fully recurrent network architecture without output activation and bias units defined as

hat

with input vectors hidden preactivation vectors hidden activation vectors activation function and parameter matrices Let hat denote the loss function at time and let denote the total loss. We use denominatorlayout convention, ie is a column vector. Which of the following statements are true?

a The asymptotic complexity of BPTT is

b The gradient of the loss with respect,to the input weights can be written as

c BPTT is a common regularization technique for recurrent neural networks.

d The gradient of the loss with respect to the recurrent weights can be written as

e The deltas fulfill the recursive relation diag

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started