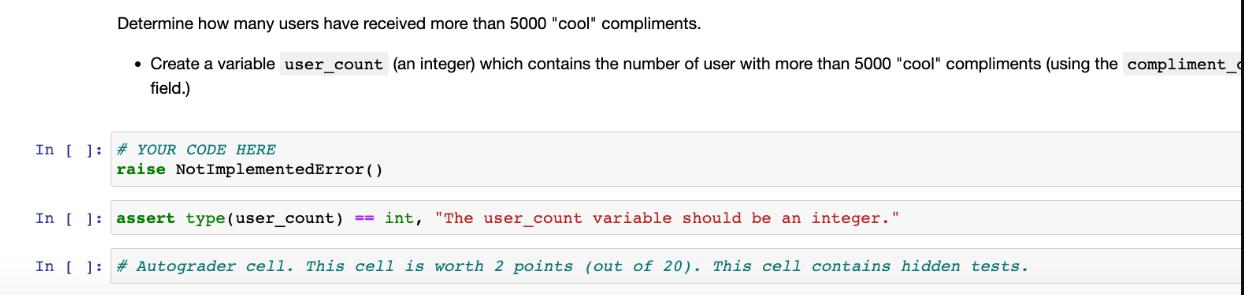

Question: Determine how many users have received more than 5000 cool compliments. Create a variable user_count (an integer) which contains the number of user with

![more than 5000 "cool" compliments (using the compliment_ field.) In [ ]](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2023/09/64f2de3c8755a_1693638203571.jpg)

![# YOUR CODE HERE raise NotImplementedError() In [ ] assert type (user_count)](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2023/09/64f2de49e5d06_1693638216896.jpg)

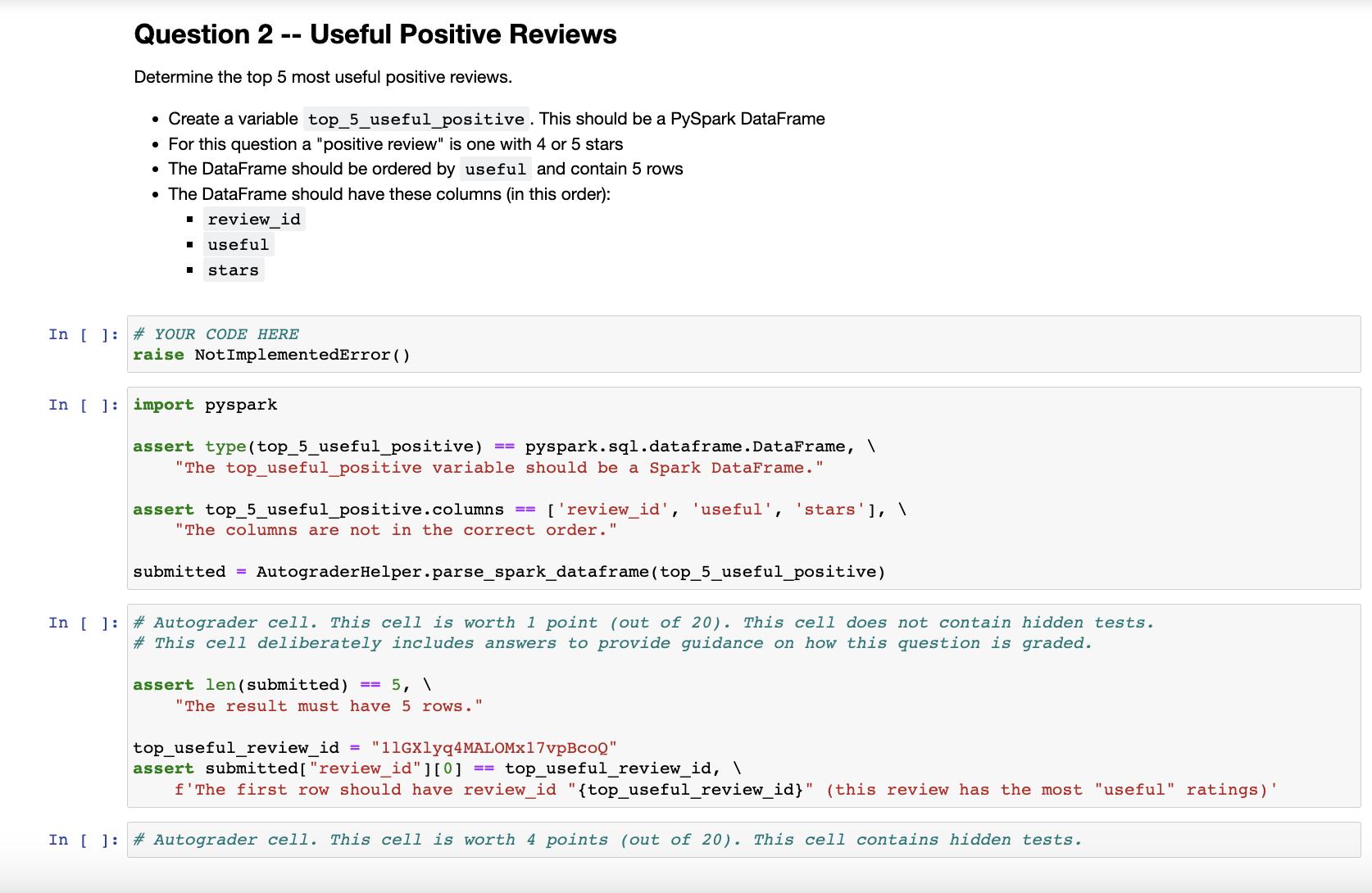

Determine how many users have received more than 5000 "cool" compliments. Create a variable user_count (an integer) which contains the number of users with more than 5000 "cool" compliments (using the compliment_ field).

In [ ]:

    # YOUR CODE HERE
    raise NotImplementedError()

In [ ]:

    assert type(user_count) == int, "The user_count variable should be an integer."

In [ ]:

    # Autograder cell. This cell is worth 2 points (out of 20). This cell contains hidden tests.

Question 2 -- Useful Positive Reviews

Determine the top 5 most useful positive reviews. Create a variable top_5_useful_positive.

- This should be a PySpark DataFrame.
- For this question a "positive review" is one with 4 or 5 stars.
- The DataFrame should be ordered by useful and contain 5 rows.
- The DataFrame should have these columns (in this order):
  - review_id
  - useful
  - stars

In [ ]:

    # YOUR CODE HERE
    raise NotImplementedError()

In [ ]:

    import pyspark

    assert type(top_5_useful_positive) == pyspark.sql.dataframe.DataFrame, \
        "The top_useful_positive variable should be a Spark DataFrame."
    assert top_5_useful_positive.columns == ['review_id', 'useful', 'stars'], \
        "The columns are not in the correct order."
    submitted = AutograderHelper.parse_spark_dataframe(top_5_useful_positive)

In [ ]:

    # Autograder cell. This cell is worth 1 point (out of 20). This cell does not contain hidden tests.
    # This cell deliberately includes answers to provide guidance on how this question is graded.
    assert len(submitted) == 5, \
        "The result must have 5 rows."
    top_useful_review_id = "11GX1yq4MALOMx17vpBcOQ"
    assert submitted["review_id"][0] == top_useful_review_id, \
        f'The first row should have review_id "{top_useful_review_id}" (this review has the most "useful" ratings)'

In [ ]:

    # Autograder cell. This cell is worth 4 points (out of 20). This cell contains hidden tests.

Question 3 -- Checkins

Determine what hours of the day most checkins occur. Create a variable hours_by_checkin_count.

- This should be a PySpark DataFrame.
- The DataFrame should be ordered by count and contain 24 rows.
- The DataFrame should have these columns (in this order):
  - hour (the hour of the day as an integer, the hour after midnight being 0)
  - count (the number of checkins that occurred in that hour)

Note that the date column in the checkin data is a string with multiple date times in it. You'll need to split that string before parsing.

In [ ]:

    # YOUR CODE HERE
    raise NotImplementedError()

In [ ]:

    assert type(hours_by_checkin_count) == pyspark.sql.dataframe.DataFrame, \
        "The hours_by_checkin_count variable should be a Spark DataFrame."
    assert hours_by_checkin_count.columns == ["hour", "count"], \
        "The columns are not in the correct order."
    submitted = AutograderHelper.parse_spark_dataframe(hours_by_checkin_count)

In [ ]:

    # Autograder cell. This cell is worth 1 point (out of 20). This cell does not contain hidden tests.
    assert len(submitted) == 24, \
        "The hours_by_checkin_count DataFrame must have 24 rows."
    assert submitted["hour"][0] == 1, \
        'The first row should have hour 1'

In [ ]:

    # Autograder cell. This cell is worth 4 points (out of 20). This cell contains hidden tests.

Question 4 -- Common Words in Useful Reviews

Write a function that takes a Spark DataFrame as a parameter and returns a Spark DataFrame of the 50 most common words from useful reviews and their counts.

- A "useful review" has 10 or more "useful" ratings.
- Convert the text to lower case.
- Use the provided splitter() function in a UDF to split the text into individual words.
- Exclude the words in the provided STOP_WORDS set.
- Returned DataFrame should have these columns (in this order):
  - word
  - count
- Returned DataFrame should be sorted by count in descending order.

In [ ]:

    import re

    def splitter(text):
        WORD_RE = re.compile(r"[\w']+")
        return WORD_RE.findall(text)

    STOP_WORDS = {
        "a", "about", "above", "after", "again", "against", "aint", "all", "also", "although",
        "am", "an", "and", "any", "are", "as", "at", "be", "because", "been", "before", "being",
        "below", "between", "both", "but", "by", "can", "check", "checked", "could", "did", "do",
        "does", "doing", "don", "down", "during", "each", "few", "for", "from", "further", "get",
        "go", "got", "had", "has", "have", "having", "he", "her", "here", "hers", "herself", "him",
        "himself", "his", "how", "however", "i", "i'd", "if", "i'm", "in", "into", "is", "it",
        "its", "it's", "itself", "i've", "just", "me", "more", "most", "my", "myself", "no", "nor",
        "not", "now", "of", "off", "on", "once", "one", "online", "only", "or", "other", "our",
        "ours", "ourselves", "out", "over", "own", "paid", "place", "s", "said", "same", "service",
        "she", "should", "so", "some", "such", "t", "than", "that", "the", "their", "theirs",
        "them", "themselves", "then", "there", "these", "they", "this", "those", "through", "to",
        "too", "under", "until", "up", "us", "very", "was", "we", "went", "were", "we've", "what",
        "when", "where", "which", "while", "who", "whom", "why", "will", "with", "would", "you",
        "your", "yours", "yourself", "yourselves",
    }

    def common_useful_words(reviews, limit=50):
        # YOUR CODE HERE
        raise NotImplementedError()
        return most_common

Now we'll run it on the review DataFrame.

In [ ]:

    common_useful_words_counts = common_useful_words(review)

In [ ]:

    assert type(common_useful_words_counts) == pyspark.sql.dataframe.DataFrame, \
        "The common_useful_words_counts variable should be a Spark DataFrame."
    assert common_useful_words_counts.columns == ["word", "count"], \
        "The columns are not in the correct order."
    submitted = AutograderHelper.parse_spark_dataframe(common_useful_words_counts)

In [ ]:

    # Autograder cell. This cell is worth 2 points (out of 20). This cell does not contain hidden tests.
    assert len(submitted) == 50, \
        "The common_useful_words_counts DataFrame must have 50 rows."
    assert submitted["word"][0] == 'like', \
        'The first row should have word "like"'
    assert submitted["count"][0] == 101251, \
        'The first row should have count 101251'

In [ ]:

    # Autograder cell. This cell is worth 6 points (out of 20). This cell contains hidden tests.
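As a rough sketch only, Questions 2 through 4 could be approached along the following lines. Several things here are assumptions and not taken from the notebook: the function names `top_useful_positive`, `hours_by_checkin`, `common_useful_words_sketch`, `checkin_hours` and `top_words` are hypothetical; the timestamps inside the checkin `date` string are assumed to be separated by `", "` and formatted as `YYYY-MM-DD HH:MM:SS`; and "ordered by" is read as descending, which matches the visible autograder checks. The tokenizer is repeated so the block stands alone, and PySpark is imported inside the Spark functions so the two pure-Python helpers can be run without a Spark installation.

```python
import re
from collections import Counter
from datetime import datetime

WORD_RE = re.compile(r"[\w']+")


def splitter(text):
    """Same tokenizer as the notebook's splitter(), repeated to keep this block standalone."""
    return WORD_RE.findall(text)


def checkin_hours(date_string, sep=", ", fmt="%Y-%m-%d %H:%M:%S"):
    """Pure-Python reference for Question 3: the hour of each timestamp in one checkin string.
    The separator and format are assumptions about the data."""
    return [datetime.strptime(part, fmt).hour for part in date_string.split(sep) if part]


def top_words(texts, stop_words, limit=50):
    """Pure-Python reference for Question 4, useful for checking Spark output on a small sample."""
    counts = Counter(
        word
        for text in texts
        for word in splitter(text.lower())
        if word not in stop_words
    )
    return counts.most_common(limit)


def top_useful_positive(review, n=5):
    """Question 2 sketch: the n positive reviews (4 or 5 stars) with the most 'useful' ratings."""
    from pyspark.sql import functions as F  # lazy import: only needed when Spark is used

    return (
        review.filter(F.col("stars") >= 4)
        .select("review_id", "useful", "stars")
        .orderBy(F.desc("useful"))
        .limit(n)
    )


def hours_by_checkin(checkin):
    """Question 3 sketch: split the date string, explode to one row per checkin, count per hour."""
    from pyspark.sql import functions as F

    timestamps = checkin.select(F.explode(F.split(F.col("date"), ", ")).alias("ts"))
    return (
        timestamps.select(F.hour(F.to_timestamp("ts")).alias("hour"))
        .groupBy("hour")
        .count()
        .orderBy(F.desc("count"))
    )


def common_useful_words_sketch(reviews, stop_words, limit=50):
    """Question 4 sketch: most common non-stop words in reviews with 10 or more 'useful' ratings."""
    from pyspark.sql import functions as F
    from pyspark.sql.types import ArrayType, StringType

    split_udf = F.udf(splitter, ArrayType(StringType()))
    words = reviews.filter(F.col("useful") >= 10).select(
        F.explode(split_udf(F.lower(F.col("text")))).alias("word")
    )
    return (
        words.filter(~F.col("word").isin(list(stop_words)))
        .groupBy("word")
        .count()
        .orderBy(F.desc("count"))
        .limit(limit)
    )
```

In the notebook these would presumably be called as `top_5_useful_positive = top_useful_positive(review)`, `hours_by_checkin_count = hours_by_checkin(checkin)` and, inside `common_useful_words`, `common_useful_words_sketch(reviews, STOP_WORDS, limit)`, assuming the DataFrames are named `review` and `checkin`.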

Step by Step Solution


There are 3 steps involved.

Step 1: Here is the code to determine how many users have received more than 5000 cool compliments (Python):

    from pyspark.sql import SparkSession
    spark = SparkSession.builder.appName("Cool Compliments").getOrCreate()

Load ...
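A minimal sketch of how the count itself might be computed once the user data is in a DataFrame. The column name `compliment_cool` is an assumption (the question only shows "compliment_"), as are the helper name `count_cool_users` and the DataFrame name `user`. It relies on `DataFrame.filter` accepting a SQL expression string and on `DataFrame.count()` returning a Python `int`, which is what the `assert type(user_count) == int` check expects.

```python
def count_cool_users(user_df, threshold=5000, column="compliment_cool"):
    """Count rows of user_df whose `column` value is strictly greater than `threshold`.

    `column` defaults to an assumed field name; adjust it to the actual schema.
    """
    # filter() takes a SQL expression string, so no pyspark imports are needed here.
    return user_df.filter(f"{column} > {threshold}").count()


# Hypothetical usage in the notebook, where `user` is assumed to be the users DataFrame:
# user_count = count_cool_users(user)
```

Because the comparison is strict, a user with exactly 5000 "cool" compliments is not counted.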
