Question: Let's consider an MDP defined by the set of states ? = {-1, 0, +1, +2, +3). The start state is Sstart1. The set of

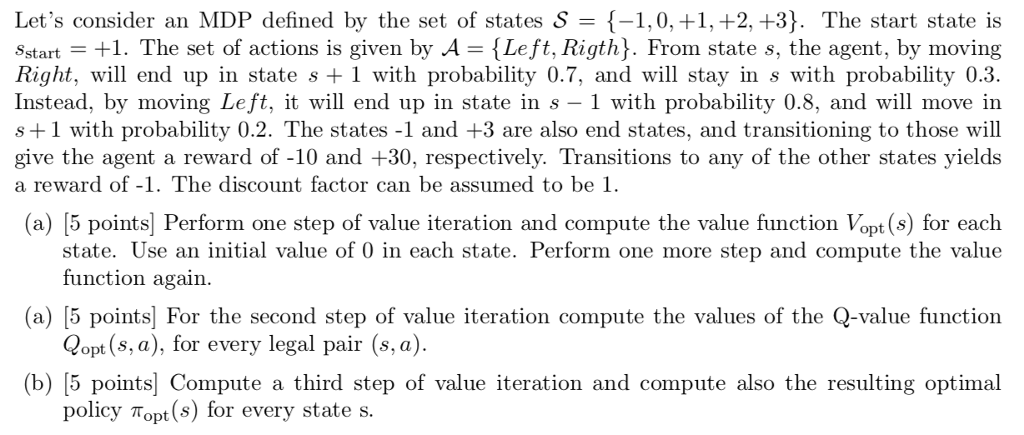

Let's consider an MDP defined by the set of states ? = {-1, 0, +1, +2, +3). The start state is Sstart1. The set of actions is given by A Left, Rigth). From state s, the agent, by moving Right, will end up in state s1 with probability 0.7, and will stay in s with probability 0.3. Instead, by moving Left, it will end up in state in s - 1 with probability 0.8, and will move in s +1 with probability 0.2. The states -1 and +3 are also end states, and transitioning to those will give the agent a reward of -10 and +30, respectively. Transitions to any of the other states yields a reward of -1. The discount factor can be assumed to be 1 (a) 5 points Perform one step of value iteration and compute the value function Vopt (s) for each state. Use an initial value of 0 in each state. Perform one more step and compute the value (a) 5 points] For the second step of value iteration compute the values of the Q-value function (b) [5 points] Compute a third step of value iteration and compute also the resulting optimal function again opt(s, a), for every legal pair (s, a). policy ?0pt(s) for every state s. Let's consider an MDP defined by the set of states ? = {-1, 0, +1, +2, +3). The start state is Sstart1. The set of actions is given by A Left, Rigth). From state s, the agent, by moving Right, will end up in state s1 with probability 0.7, and will stay in s with probability 0.3. Instead, by moving Left, it will end up in state in s - 1 with probability 0.8, and will move in s +1 with probability 0.2. The states -1 and +3 are also end states, and transitioning to those will give the agent a reward of -10 and +30, respectively. Transitions to any of the other states yields a reward of -1. The discount factor can be assumed to be 1 (a) 5 points Perform one step of value iteration and compute the value function Vopt (s) for each state. Use an initial value of 0 in each state. Perform one more step and compute the value (a) 5 points] For the second step of value iteration compute the values of the Q-value function (b) [5 points] Compute a third step of value iteration and compute also the resulting optimal function again opt(s, a), for every legal pair (s, a). policy ?0pt(s) for every state s

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts