Question

(Machine Learning) Serious answers only please, preferably with an explanation. Thanks!

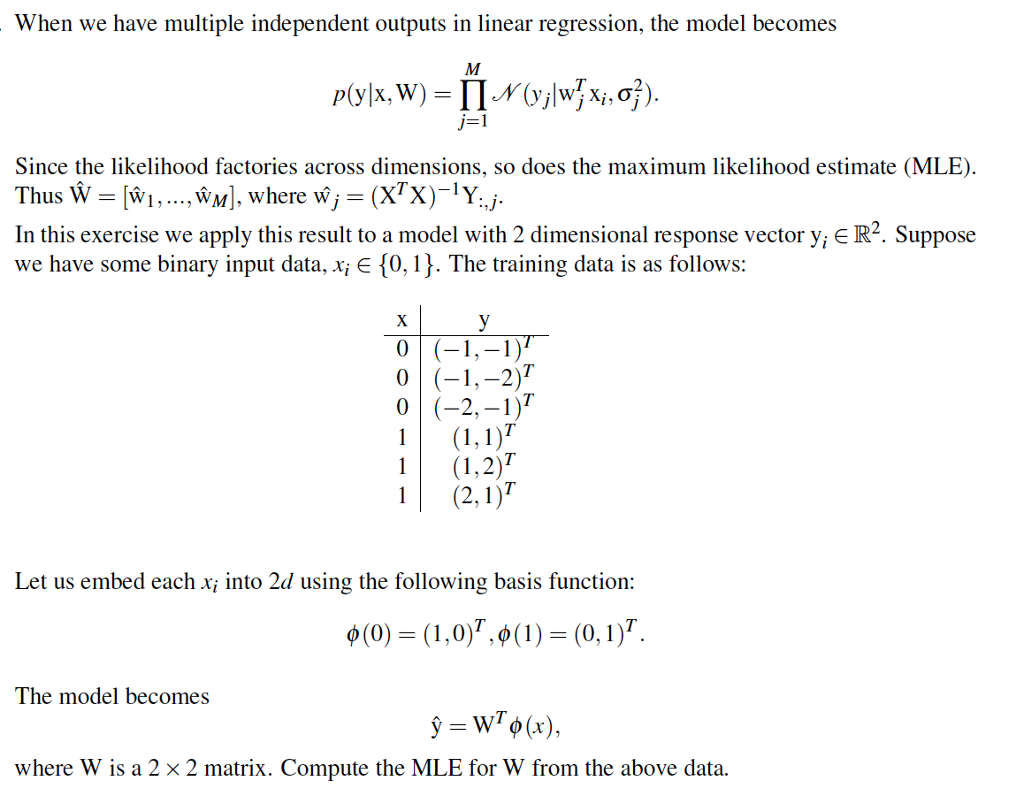

When we have multiple independent outputs in linear regression, the model becomes (equation shown in the image above). Since the likelihood factorizes across dimensions, so does the maximum likelihood estimate (MLE). Thus $\hat{W} = [\hat{w}_1, \ldots, \hat{w}_M]$, where $\hat{w}_j = (X^T X)^{-1} X^T Y_{:,j}$.

In this exercise we apply this result to a model with a 2-dimensional response vector $y_i \in \mathbb{R}^2$. Suppose we have some binary input data, $x_i \in \{0, 1\}$. The training data is as follows: (table shown in the image above). Let us embed each $x_i$ into 2d using the following basis function: (shown in the image above). The model becomes (equation shown in the image above), where $W$ is a $2 \times 2$ matrix. Compute the MLE for $W$ from the above data.
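The per-output formula $\hat{w}_j = (X^T X)^{-1} X^T Y_{:,j}$ can be applied to all outputs at once as $\hat{W} = (\Phi^T \Phi)^{-1} \Phi^T Y$, where $\Phi$ stacks the embedded inputs. The sketch below illustrates the procedure in plain Python. It rests on two assumptions, because the actual values are only in the image: the basis function is taken to be a one-hot embedding, $\phi(0) = (1, 0)^T$ and $\phi(1) = (0, 1)^T$, and the training data is a hypothetical placeholder, not the data from the question. The helper names (`phi`, `fit_mle`, and so on) are made up for this illustration.

```python
def transpose(A):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def inv2x2(A):
    """Invert a 2x2 matrix using the closed-form adjugate formula."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("Phi^T Phi is singular; both input values must occur")
    return [[d / det, -b / det], [-c / det, a / det]]


def phi(x):
    """ASSUMED one-hot basis function: phi(0) = (1, 0), phi(1) = (0, 1)."""
    return [1.0, 0.0] if x == 0 else [0.0, 1.0]


def fit_mle(xs, ys):
    """Return W_hat = (Phi^T Phi)^{-1} Phi^T Y as a 2x2 list of rows.

    Row k holds the weights attached to basis component k;
    column j holds the weight vector w_j for output dimension j.
    """
    Phi = [phi(x) for x in xs]      # N x 2 design matrix
    Phi_t = transpose(Phi)          # 2 x N
    gram_inv = inv2x2(matmul(Phi_t, Phi))
    return matmul(gram_inv, matmul(Phi_t, ys))


# HYPOTHETICAL placeholder data (not the table from the question).
xs = [0, 0, 1, 1]
ys = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]

W_hat = fit_mle(xs, ys)
print(W_hat)  # [[2.0, 3.0], [6.0, 7.0]]
```

Under the one-hot assumption, $\Phi^T \Phi$ is diagonal with the counts of $x = 0$ and $x = 1$ on the diagonal, so each row of $\hat{W}$ is simply the mean of the responses $y_i$ for the corresponding input value. In the placeholder data, the first row is the mean of the two responses with $x = 0$ and the second row is the mean of the two with $x = 1$. Substituting the real table and basis function from the image should give the requested answer by the same steps.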
