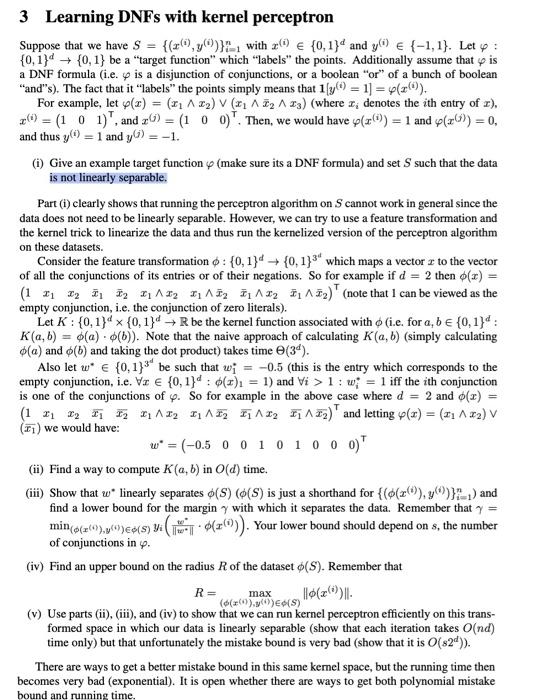

3 Learning DNFs with kernel perceptron

Suppose that we have $S = \{(x^{(i)}, y^{(i)})\}_{i=1}^{n}$, with $x^{(i)} \in \{0,1\}^d$ and $y^{(i)} \in \{-1,1\}$. Let $\psi: \{0,1\}^d \to \{0,1\}$ be a "target function" which "labels" the points. Additionally assume that $\psi$ is a DNF formula (i.e. $\psi$ is a disjunction of conjunctions, or a boolean "or" of a bunch of boolean "and"s). The fact that it "labels" the points simply means that $\mathbb{1}[y^{(i)} = 1] = \psi(x^{(i)})$. For example, let $\psi(x) = (x_1 \wedge x_2) \vee (x_1 \wedge \bar{x}_2 \wedge x_3)$ (where $x_i$ denotes the $i$th entry of $x$).

Let $\phi(x)$ be the feature vector whose entries are the values on $x$ of all conjunctions of literals, starting with the constant $1$, and let $K(a, b) = \phi(a) \cdot \phi(b)$ be the corresponding kernel. Let $w^*$ be the weight vector whose first entry is $-0.5$ and whose remaining entries satisfy $w^*_i = 1$ iff the $i$th conjunction is one of the conjunctions of $\psi$ (and $w^*_i = 0$ otherwise). So for example in the case where $d = 2$ and $\phi(x) = (1,\ x_1,\ x_2,\ \bar{x}_1,\ \bar{x}_2,\ x_1 \wedge x_2,\ x_1 \wedge \bar{x}_2,\ \bar{x}_1 \wedge x_2,\ \bar{x}_1 \wedge \bar{x}_2)$, and letting $\psi(x) = (x_1 \wedge x_2) \vee (\bar{x}_1)$, we would have $w^* = (-0.5,\ 0,\ 0,\ 1,\ 0,\ 1,\ 0,\ 0,\ 0)$.

(ii) Find a way to compute $K(a, b)$ in $O(d)$ time.

(iii) Show that $w^*$ linearly separates $\phi(S)$ (where $\phi(S)$ is just a shorthand for $\{(\phi(x^{(i)}), y^{(i)})\}_{i=1}^{n}$) and find a lower bound for the margin $\gamma$ with which it separates the data. Remember that

$$\gamma = \min_{(\phi(x^{(i)}),\, y^{(i)}) \in \phi(S)} \frac{y^{(i)} \left(w^* \cdot \phi(x^{(i)})\right)}{\|w^*\|}.$$

Your lower bound should depend on $s$, the number of conjunctions in $\psi$.

(iv) Find an upper bound on the radius $R$ of the dataset $\phi(S)$. Remember that

$$R = \max_{(\phi(x^{(i)}),\, y^{(i)}) \in \phi(S)} \|\phi(x^{(i)})\|.$$

(v) Use parts (ii), (iii), and (iv) to show that we can run kernel perceptron efficiently on this transformed space in which our data is linearly separable (show that each iteration takes only $O(nd)$ time) but that unfortunately the mistake bound is very bad (show that it is $O(s 2^d)$). There are ways to get a better mistake bound in this same kernel space, but the running time then becomes very bad (exponential). It is open whether there are ways to get both a polynomial mistake bound and a polynomial running time.
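To make the setup concrete, here is a minimal Python sketch of kernel perceptron over the conjunction feature space. It is one possible approach under stated assumptions, not the intended solution: it assumes the feature map contains every conjunction of literals (including the empty conjunction, i.e. the constant 1), so that the kernel counts the conjunctions satisfied by both inputs. The function names `explicit_features`, `dnf_kernel`, `kernel_perceptron`, and `predict` are hypothetical, and `explicit_features` enumerates features in a different order from the $d = 2$ example, which does not affect dot products.

```python
from itertools import product


def explicit_features(x):
    """Evaluate all 3^d conjunctions of literals on x.

    Exponential in d; intended only for checking the kernel on tiny inputs.
    Per variable: 0 = omit it, 1 = require x_j = 1, 2 = require x_j = 0.
    """
    feats = []
    for choice in product((0, 1, 2), repeat=len(x)):
        satisfied = all(
            c == 0 or (c == 1 and xj == 1) or (c == 2 and xj == 0)
            for c, xj in zip(choice, x)
        )
        feats.append(1 if satisfied else 0)
    return feats


def dnf_kernel(a, b):
    """Count conjunctions satisfied by both a and b in O(d) time.

    Assumption: a shared conjunction may only use coordinates where a and b
    agree, and each such coordinate is either omitted or included with the
    matching literal, giving 2^(number of agreeing coordinates).
    """
    agree = sum(1 for aj, bj in zip(a, b) if aj == bj)
    return 1 << agree


def kernel_perceptron(points, labels, max_epochs=1000):
    """Dual-form perceptron; each example costs n kernel calls, i.e. O(nd)."""
    n = len(points)
    alpha = [0] * n  # alpha[j] = number of mistakes made on example j
    mistakes = 0
    for _ in range(max_epochs):
        clean_pass = True
        for i in range(n):
            score = sum(
                alpha[j] * labels[j] * dnf_kernel(points[j], points[i])
                for j in range(n)
                if alpha[j]
            )
            if labels[i] * score <= 0:  # mistake: add example i to the expansion
                alpha[i] += 1
                mistakes += 1
                clean_pass = False
        if clean_pass:
            break
    return alpha, mistakes


def predict(points, labels, alpha, x):
    """Classify x with the learned dual coefficients."""
    score = sum(a * y * dnf_kernel(p, x) for a, y, p in zip(alpha, labels, points))
    return 1 if score > 0 else -1


if __name__ == "__main__":
    # Target from the d = 2 example: (x1 AND x2) OR (NOT x1).
    pts = list(product((0, 1), repeat=2))
    labs = [1 if ((x[0] and x[1]) or not x[0]) else -1 for x in pts]
    alpha, mistakes = kernel_perceptron(pts, labs)
    print(alpha, mistakes)
```

Comparing `dnf_kernel(a, b)` against the dot product of `explicit_features(a)` and `explicit_features(b)` for small $d$ is a quick sanity check that the closed form matches the explicit feature map.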