Question: Let us define a gridworld MDP , depicted in Figure 2 . The states are grid squares, identified by their row and column number (

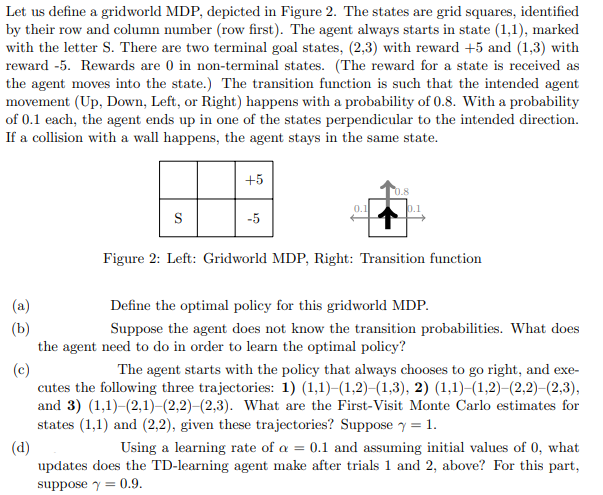

Let us define a gridworld MDP depicted in Figure The states are grid squares, identified

by their row and column number row first The agent always starts in state marked

with the letter S There are two terminal goal states, with reward and with

reward Rewards are in nonterminal states. The reward for a state is received as

the agent moves into the state. The transition function is such that the intended agent

movement Up Down, Left, or Right happens with a probability of With a probability

of each, the agent ends up in one of the states perpendicular to the intended direction.

If a collision with a wall happens, the agent stays in the same state.

Figure : Left: Gridworld MDP Right: Transition function

a

Define the optimal policy for this gridworld MDP

b

Suppose the agent does not know the transition probabilities. What does

the agent need to do in order to learn the optimal policy?

c

The agent starts with the policy that always chooses to go right, and exe

cutes the following three trajectories:

and What are the FirstVisit Monte Carlo estimates for

states and given these trajectories? Suppose

d

Using a learning rate of and assuming initial values of what

updates does the TDlearning agent make after trials and above? For this part,

suppose

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock